Adding A Gentle Touch To Prosthetic Limbs With Somatosensory Stimulation

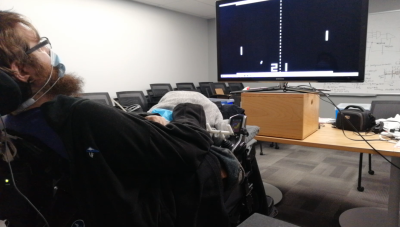

When Nathan Copeland was in a car accident in 2004, damage to his spinal cord at the C5/C6 level left him with tetraplegic paralysis. At just 18 years old, he initially faced a life without the use of his arms or legs. That changed in 2014, when he was selected for a study at the University of Pittsburgh in which a robotic limb is controlled using nothing but one’s mind and a brain-computer interface (BCI).

This approach has been replicated in various other studies, and it works well enough for simple tasks. However, it comes with a major caveat: although the user can control the robotic limb, the limb provides no feedback. Normally, when we reach out to grab an object, we are aware of the motion of our arm and hand right up to the moment our fingers touch the object, and at that point we also feel the contact.

With these robotic limbs, the only feedback was visual. The user had to watch the arm and correct its movements based on its observed position. Obviously this is far from ideal, which is why Nathan had been implanted not only with Utah arrays that read out his motor cortex, but also with arrays connected to his somatosensory cortex.

As covered in a paper by Flesher et al. in Science, stimulation of the somatosensory cortex has over the past few years given Nathan back a large part of the sensation in his arm and hand, even though the limb doing the touching is now a robotic one. This raises two questions: how complicated is this approach, and can we expect it to become a common feature of prosthetic limbs before long?

Please Plug In The BCI To Continue

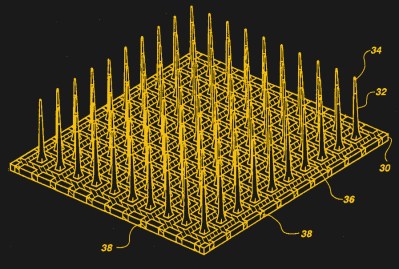

At the core of these brain-controlled prosthetics we find the brain-computer interface (BCI). This is essentially the interface between the brain’s neurons and the electronics that interact with them. The electronics either read the signals captured by a sensor device implanted in the brain, or send signals to it. The most commonly used type of implant is the so-called Utah array. This is a microelectrode array with many small electrodes. Each electrode picks up part of the electrical activity in the brain, or can be used to stimulate the neurons near that specific electrode.
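The Python sketch below makes the idea of an electrode grid a bit more concrete. It models an array as a flat grid of electrode sites and adds a helper that picks out the electrodes close to a point of interest. The 10-by-10 layout with 400 µm spacing is an assumption based on commonly quoted Utah array dimensions. The row-major channel numbering and the names `build_array` and `electrodes_near` are hypothetical. Real channel maps are vendor-specific, and real cortex is not a flat plane.

```python
import math
from dataclasses import dataclass

# Assumed geometry: a 10 x 10 grid with 400 micrometre spacing. The row-major
# channel numbering is purely illustrative (real pin maps are vendor-specific).
ROWS, COLS = 10, 10
PITCH_UM = 400.0


@dataclass(frozen=True)
class Electrode:
    channel: int   # hypothetical row-major channel index
    x_um: float    # position across the array, in micrometres
    y_um: float


def build_array(rows: int = ROWS, cols: int = COLS, pitch_um: float = PITCH_UM) -> list[Electrode]:
    """Return one Electrode per grid site, numbered row by row."""
    return [
        Electrode(channel=r * cols + c, x_um=c * pitch_um, y_um=r * pitch_um)
        for r in range(rows)
        for c in range(cols)
    ]


def electrodes_near(array: list[Electrode], x_um: float, y_um: float, radius_um: float) -> list[int]:
    """Channels whose sites lie within radius_um of a point of interest."""
    return [
        e.channel
        for e in array
        if math.hypot(e.x_um - x_um, e.y_um - y_um) <= radius_um
    ]


if __name__ == "__main__":
    array = build_array()
    print(len(array))                                # 100
    print(electrodes_near(array, 0.0, 0.0, 400.0))   # [0, 1, 10]
```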

While it’s possible to capture the brain’s activity through the skull without opening it, the general rule is that for the best signal resolution, one wants to get as close to the action as possible. A second rule is that more and smaller electrodes (generally) equate to a better signal and resolution, giving us a better idea of what’s happening in a specific part of the brain.

Obviously, just jamming some electrodes into the brain and hooking them up to some electronics isn’t likely to produce very meaningful results. To these tiny electrodes, the brain is a very big world, with countless electrical impulses zipping to and fro. A crucial step is to pick out the relevant signal from the noise in these recordings. Only then can one tell when, for example, the patient wants to lift the prosthetic arm, or to open or close the hand.
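A common first step is threshold-crossing detection. This is assumed here rather than taken from the Pittsburgh work. The idea is to estimate the background noise level on each electrode, flag every moment the voltage dips well below it, and count those events in short time bins. The counts give a rough firing rate that a decoder can work with. The minimal NumPy sketch below uses these illustrative values:

- a multiplier of 4.5 on a median-based noise estimate
- a 1 ms refractory window
- 20 ms bins

All of these values are assumptions, and the function names are hypothetical.

```python
import numpy as np


def detect_threshold_crossings(signal, fs_hz, k=4.5, refractory_ms=1.0):
    """Return sample indices where the signal first drops below -k times the noise level."""
    signal = np.asarray(signal, dtype=float)
    # Robust noise estimate: median(|x|) / 0.6745 approximates the standard
    # deviation of Gaussian background noise and is barely affected by spikes.
    noise_sigma = np.median(np.abs(signal)) / 0.6745
    threshold = -k * noise_sigma
    below = signal < threshold
    # An onset is a sample below threshold whose predecessor was not.
    onsets = np.flatnonzero(below[1:] & ~below[:-1]) + 1
    # Drop onsets that fall inside the refractory window of the previous one.
    min_gap = int(round(refractory_ms * 1e-3 * fs_hz))
    kept = []
    for idx in onsets:
        if not kept or idx - kept[-1] >= min_gap:
            kept.append(int(idx))
    return kept


def bin_counts(crossings, n_samples, fs_hz, bin_ms=20.0):
    """Count crossings per fixed-width time bin (a crude firing-rate estimate)."""
    bin_len = int(round(bin_ms * 1e-3 * fs_hz))
    counts = np.zeros(n_samples // bin_len, dtype=int)
    for idx in crossings:
        b = idx // bin_len
        if b < counts.size:
            counts[b] += 1
    return counts


if __name__ == "__main__":
    fs = 30_000  # assumed sampling rate; one second of synthetic data
    rng = np.random.default_rng(0)
    trace = rng.normal(0.0, 1.0, fs)
    for t in (3_000, 9_000, 21_000):  # inject three large negative "spikes"
        trace[t] = -20.0
    crossings = detect_threshold_crossings(trace, fs)
    print(crossings)
    print(bin_counts(crossings, trace.size, fs).sum())
```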

Similarly, while one can totally inject current into random parts of the brain, the goal is to elicit a specific sensation rather than to hit whatever neurons happen to be nearby. In Nathan’s case, the ideal was to link, for example, pressure on a given finger of the robotic hand to the area of his somatosensory cortex that represents that same finger.
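The hypothetical sketch below illustrates the kind of mapping involved. It turns force readings from sensors on each robotic finger into stimulation commands for the electrodes that, during calibration, evoked a sensation in that same finger. The following are all made-up placeholders:

- the channel numbers
- the force thresholds
- the 60 µA ceiling

The linear force-to-amplitude scaling is an assumption chosen for simplicity, not the study’s published stimulation scheme.

```python
# Hypothetical calibration result: which stimulating channels evoked a
# sensation that the participant reported in each finger.
FINGER_TO_CHANNELS = {
    "index": [12, 13],
    "middle": [22],
    "ring": [31, 41],
}
CONTACT_FLOOR_N = 0.2     # assumed force below which no stimulation is sent
FULL_SCALE_N = 10.0       # assumed force mapped to the maximum amplitude
MAX_AMPLITUDE_UA = 60.0   # assumed per-channel amplitude ceiling, microamps


def stimulation_commands(forces_n):
    """Turn per-finger sensor forces (newtons) into {channel: amplitude_uA}."""
    commands = {}
    for finger, force in forces_n.items():
        channels = FINGER_TO_CHANNELS.get(finger)
        if not channels or force < CONTACT_FLOOR_N:
            continue  # unmapped finger, or no meaningful contact
        # Scale linearly from the contact floor to full scale, then clamp at 1.
        fraction = min((force - CONTACT_FLOOR_N) / (FULL_SCALE_N - CONTACT_FLOOR_N), 1.0)
        amplitude = round(fraction * MAX_AMPLITUDE_UA, 1)
        for channel in channels:
            commands[channel] = amplitude
    return commands


if __name__ == "__main__":
    print(stimulation_commands({"index": 5.1, "middle": 0.1, "thumb": 3.0}))
    # {12: 30.0, 13: 30.0}
```

The clamp is the important design choice here. Whatever the sensors report, this sketch never commands more current than the assumed ceiling.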

An Imperfect Approximation

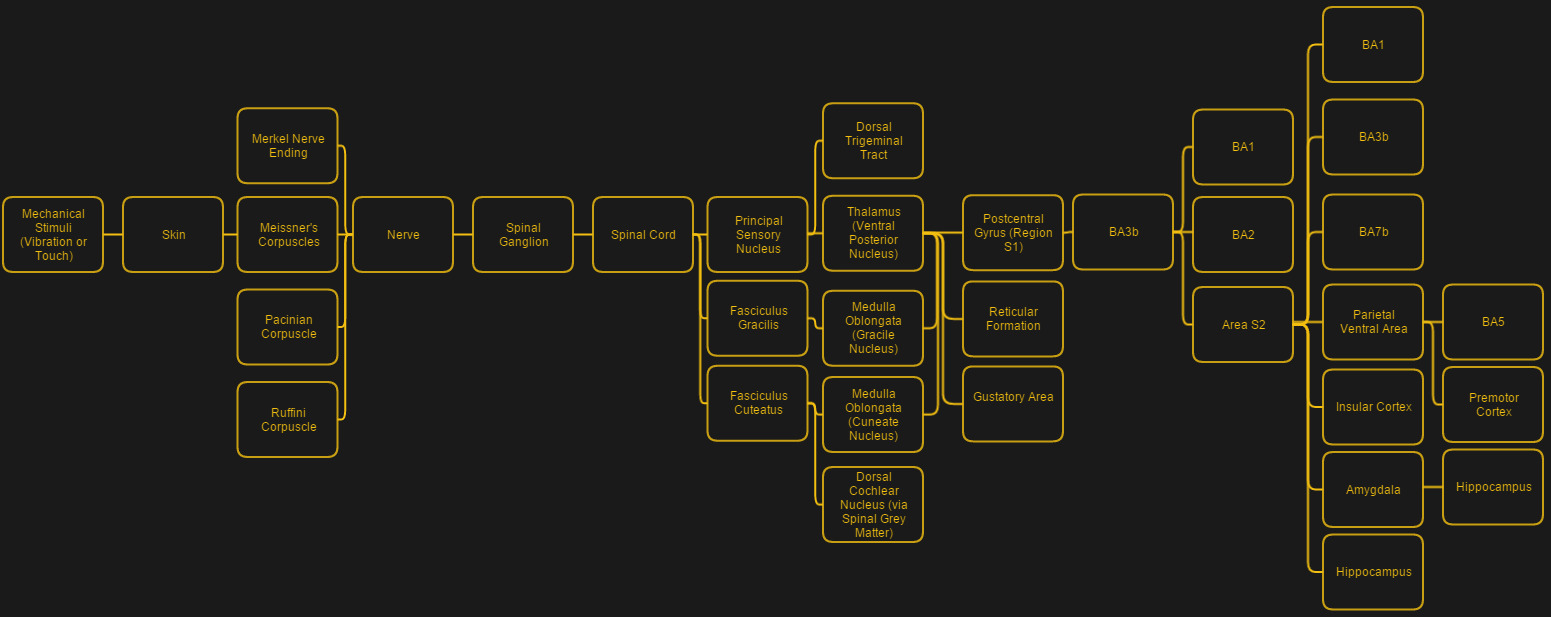

When we talk about ‘touch’, humans have four different types of mechanoreceptors embedded in the skin. Together, they are responsible for the full range of touch-related sensations:

- Pacinian corpuscles (coarse touch, distinguishing soft from rough surfaces).

- Tactile corpuscles (light touch and moderate (10-50 Hz) vibration).

- Merkel cell nerve endings (deep static touch, 5-15 Hz vibrations).

- Bulbous corpuscles (slow response, e.g. skin stretching and slipping objects).

This range of different sensor types shows the sheer complexity of restoring something like the sense of touch. Ideally, one would want to replicate these different types of sensory perception. One would also want to deliver them to the somatosensory cortex in a way that fits within its existing processing pathways.
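One way to think about this is to split a single fingertip force signal into two parts:

- a slowly varying ‘sustained’ component
- a ‘transient’ component that only responds to changes

This loosely mirrors the difference between slowly adapting receptors, which report steady pressure, and rapidly adapting ones, which respond to contact and vibration. This bio-inspired split is only an illustration, not the encoding used in Nathan’s study. The 20 ms time constant and the function name are hypothetical.

```python
import math

import numpy as np


def split_sustained_transient(force, fs_hz, tau_ms=20.0):
    """Split a force trace into a slowly varying and a change-driven component."""
    force = np.asarray(force, dtype=float)
    # Sustained part: first-order (exponential) low-pass, loosely analogous to
    # a slowly adapting receptor that keeps reporting steady pressure.
    alpha = 1.0 - math.exp(-1.0 / (tau_ms * 1e-3 * fs_hz))
    sustained = np.empty_like(force)
    level = force[0]
    for i, value in enumerate(force):
        level += alpha * (value - level)
        sustained[i] = level
    # Transient part: magnitude of the rate of change, loosely analogous to a
    # rapidly adapting receptor that fires at contact onset and release.
    transient = np.abs(np.diff(force, prepend=force[0])) * fs_hz
    return sustained, transient


if __name__ == "__main__":
    fs = 1_000
    step = np.concatenate([np.zeros(100), np.ones(200)])  # press at 100 ms
    sustained, transient = split_sustained_transient(step, fs)
    print(int(transient.argmax()), round(float(sustained[-1]), 3))  # 100 1.0
```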

As noted in the University of Pittsburgh’s press release on the most recent results, the goal was to provide somatosensory feedback whenever the robotic hand made contact with an object. This let the subject feel the moment of contact, in addition to confirming the action visually. As Nathan himself described it after the experiments, it felt more like pressure and a tingle than the natural sensation of ‘touch’ that he remembers from before the accident that caused his paralysis. Even so, he was able to use this new feedback to improve his performance on standard tests, compared to when no somatosensory feedback was provided.
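Delivering feedback at ‘the moment of contact’ raises a small practical problem. A noisy force sensor hovering around a single threshold would report contact on and off many times per second. A common remedy is hysteresis, assumed here rather than taken from the study. Contact starts above one threshold and only ends once the force drops below a lower one. The class name and both thresholds in this Python sketch are hypothetical.

```python
class ContactDetector:
    """Contact detection with hysteresis; thresholds are hypothetical (newtons)."""

    def __init__(self, on_threshold=0.5, off_threshold=0.2):
        if off_threshold >= on_threshold:
            raise ValueError("off_threshold must be below on_threshold")
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.in_contact = False

    def update(self, force):
        """Feed one sensor reading; return 'onset', 'release' or None."""
        if not self.in_contact and force >= self.on_threshold:
            self.in_contact = True
            return "onset"
        if self.in_contact and force <= self.off_threshold:
            self.in_contact = False
            return "release"
        return None  # no change in contact state


if __name__ == "__main__":
    detector = ContactDetector()
    readings = [0.0, 0.3, 0.6, 0.4, 0.55, 0.1, 0.7]
    events = [(i, e) for i, r in enumerate(readings) if (e := detector.update(r))]
    print(events)  # [(2, 'onset'), (5, 'release'), (6, 'onset')]
```

The dip to 0.4 in the middle does not end the contact, because it stays above the lower threshold.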

A Testament To The Brain’s Adaptability

The sensory input that Nathan’s brain received was obviously not what it had been receiving before his injury years earlier. It was nevertheless ‘good enough’ to give Nathan a marked improvement in quality of life. That improvement came simply from a sensation evoked roughly in the ‘hand’ area of his somatosensory cortex, which he could link to the motor cortex activity that moved the robotic limb.

This is consistent with previous studies on, for example:

- the feeling of body ownership
- the role of the insular cortex in keeping track of one’s limbs
- the ease with which an additional limb extension can be added

An example of the last point is the ‘third thumb’ art project (which we covered previously), in which a second thumb was added to a hand and users learned to control it in a fairly natural manner. Essentially, it appears that one can subject the human brain to a lot of ‘unnatural’ situations, and it’ll find a way to adapt and make the best of things.

Based on the results so far, we could reasonably assume that adding even simplistic touch sensors to brain-controlled prosthetic limbs would provide a much appreciated boost in quality of life for those who use these prosthetics on a daily basis. Some day it might even form the basis for elective BCIs. These could be used to operate certain types of machinery and tools with a precision beyond what controls like joysticks and buttons allow.

Of course, none of that matters if we cannot solve the issue of biocompatibility. This is why Nathan’s BCI may soon have to be removed, once the Utah array implants reach the end of their expected five-year lifespan.

A Story Of Cyborgs

The field of cybernetics (from Greek κυβερνητική (kybernētikḗ), meaning “governance”) involves the exploration and definition of processes in both societies and biological systems. Where possible, these processes can be enhanced or repaired. Over the years, this has led to the fields of medical cybernetics and the closely related systems biology, which work on ways to restore lost functionality to the body. This includes such essentials as artificial heart replacements and the cloning of organs using a patient’s own stem cells.

Neuroprosthetics such as the ones Nathan Copeland is helping to prototype are cybernetic neural implants that seek to restore functionality lost due to disease or an accident. They combine cybernetics and biomedical engineering. The goal is to identify and solve any remaining fundamental biological and engineering questions that prevent the lost function from being restored.

For artificial limbs such as an arm and its hand, the list of remaining issues is long. It’s hard to beat biological muscles. It is just as hard to beat the nervous system, which connects these muscles to the motor cortex and the skin’s advanced mechanoreceptors to the somatosensory cortex. It does so with a level of detail that we cannot yet hope to approach.

When biological systems are replaced with artificial ones, the result is commonly referred to as a ‘cyborg’ (cybernetic organism), even though cybernetics itself has no preference for artificial or biological systems. Regardless, the essential issue when merging biological and non-biological systems lies at the interface layer. Biological systems tend to be very hostile to implanted devices, and will damage them over time.

Devil Is In The Signal-To-Noise Ratio

We have previously looked at the start-up Neuralink’s claims about ‘revolutionary’ improvements to BCIs and bidirectional data exchange between the human brain and computer systems. Back in 2019, our conclusion was essentially that the most exciting thing Neuralink had brought to the table was its alternative to the Utah array. Its three-dimensional electrode structure added recordings from inside the brain instead of just the top layer. In theory, this gives access to a lot more of the data inside the cortices.

Even so, the data recorded by those electrodes still has to be made sense of. In Nathan Copeland’s case, the researchers used a residual sense of touch in his arms and hands to pinpoint areas to target with somatosensory stimulation, along with residual muscle control in his shoulders. For patients without any residual function, the required calibration process would be far slower. Figuring out which parts of the motor cortex map to something as intricate as the facial muscles and those involved in speech would be even more tedious.
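The minimal sketch below gives an idea of what ‘making sense of the data’ involves, using a linear decoder calibration. First, record binned firing rates while the intended movement is known, for instance while the participant watches or tries to follow the arm moving along set paths. Then fit weights that map rates to velocity by regularised least squares. This is a deliberately simple stand-in, assumed for illustration. The decoders used in the actual studies differ in their details, and the synthetic data and names below are hypothetical.

```python
import numpy as np


def _with_bias(rates):
    """Append a constant column so the decoder can learn a baseline offset."""
    return np.hstack([rates, np.ones((rates.shape[0], 1))])


def fit_linear_decoder(rates, velocities, ridge=1e-3):
    """Least-squares weights W such that [rates, 1] @ W approximates velocities."""
    X = _with_bias(rates)
    # A small ridge term keeps the solve well-posed if channels are silent or
    # strongly correlated with each other.
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ velocities)


def decode(rates, weights):
    """Map binned firing rates to an estimated intended velocity."""
    return _with_bias(rates) @ weights


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n_bins, n_channels = 500, 20
    # Synthetic calibration session: known 2-D velocities, and channels whose
    # rates depend linearly on that velocity plus a little noise.
    velocity = rng.normal(size=(n_bins, 2))
    tuning = rng.normal(size=(2, n_channels))
    rates = 5.0 + velocity @ tuning + 0.1 * rng.normal(size=(n_bins, n_channels))
    W = fit_linear_decoder(rates, velocity)
    estimate = decode(rates, W)
    print(np.corrcoef(estimate[:, 0], velocity[:, 0])[0, 1])
```

Real recordings are far noisier than this synthetic data, so the sketch only shows the basic shape of the calibration problem.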

The other complication is getting data into and out of the brain without a permanent socket on one’s skull, which is what Nathan currently has. Much like with a piercing, he has to keep the site sterile and keep the skin from getting in the way. A plug on one’s head is interesting in a Matrix-style, dystopian sci-fi fashion. Even so, constantly having one, with cables running to and from prosthetic limbs, is probably less than ideal. Internal wiring could perhaps work here, the obvious medical nightmare aside. Another option is a wireless transceiver, as Neuralink has proposed.
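Part of what makes a wireless link hard is bandwidth. The back-of-the-envelope sketch below compares streaming raw voltages with sending only per-bin spike counts. It assumes:

- 96 channels per array
- 30 kHz sampling at 16 bits per sample
- 20 ms bins with 8-bit counts

All of these figures are assumptions for illustration. The estimate also ignores the stimulation direction, packet overhead and error correction.

```python
def raw_rate_mbit_s(channels, sample_rate_hz, bits_per_sample):
    """Bandwidth (Mbit/s) needed to stream every sample from every channel."""
    return channels * sample_rate_hz * bits_per_sample / 1e6


def binned_rate_kbit_s(channels, bin_ms, bits_per_count):
    """Bandwidth (kbit/s) needed to send only per-bin threshold-crossing counts."""
    bins_per_second = 1000.0 / bin_ms
    return channels * bins_per_second * bits_per_count / 1e3


if __name__ == "__main__":
    raw = raw_rate_mbit_s(96, 30_000, 16)    # assumed: 96 ch, 30 kHz, 16-bit
    binned = binned_rate_kbit_s(96, 20, 8)   # assumed: 20 ms bins, 8-bit counts
    print(raw, binned, round(raw * 1e3 / binned))  # 46.08 38.4 1200
```

Under these assumptions, extracting features on the implant cuts the required bandwidth by roughly three orders of magnitude. The cost is that this processing then has to happen inside the body.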

In the end it appears that although science today offers a glimpse of a better future to people like Nathan, we’re still many decades away from a plug-and-play future of medical prosthetics.
