Death Of The Turing Test In An Age Of Successful AIs

IBM has come up with an automatic debating system called Project Debater that researches a topic, presents an argument, listens to a human rebuttal and formulates its own rebuttal. But does it pass the Turing test? Or does the Turing test matter anymore?

The Turing test was introduced in 1950, a year often cited as year one for AI research. It asks, “Can machines think?” Today we’re more interested in machines that can intelligently make restaurant recommendations, drive our car along the tedious highway to and from work, or identify the surprising-looking flower we just stumbled upon. These all fit the definition of AI as a machine that can perform a task normally requiring the intelligence of a human. Though as you’ll see below, on its face Turing’s test wasn’t a test for intelligence or even for thinking, but rather a way to determine a test subject’s sex.

The Imitation Game

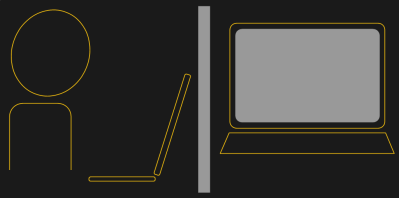

The Turing test as we know it today is to see if a machine can fool someone into thinking that it’s a human. It involves an interrogator and a machine, with the machine hidden from the interrogator. The interrogator asks questions of the machine using only a keyboard and screen. The purpose of the interrogator’s questions is to help him decide if he’s talking to a machine or a human. If he can’t tell, then the machine passes the Turing test.

Often the test is done with a number of interrogators and the measure of success is the percentage of interrogators who can’t tell. In one example, to give the machine an advantage, the test was to tell if it was a machine or a 13-year-old Ukrainian boy. The young age excused much of the strangeness in its conversation. It fooled 33% of the interrogators.
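To make that measure concrete, here is a minimal sketch of how the percentage could be tallied. The function name `fooled_rate` and the panel of 30 interrogators are assumptions made up for illustration, not figures from any actual test.

```python
def fooled_rate(verdicts):
    """Return the fraction of interrogators who mistook the machine for a human.

    `verdicts` holds one boolean per interrogator: True means that
    interrogator judged the hidden machine to be human.
    """
    if not verdicts:
        raise ValueError("need at least one interrogator verdict")
    return sum(1 for v in verdicts if v) / len(verdicts)


# Hypothetical panel: 10 of 30 interrogators judged the machine to be human.
verdicts = [True] * 10 + [False] * 20
print(f"{fooled_rate(verdicts):.0%} of interrogators were fooled")
```

Whether a given percentage counts as a pass depends on the threshold the organizers pick, which is why it is left out of the sketch.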

Naturally, Turing didn’t call his test “the Turing test”. Instead he called it the imitation game, since the goal was to imitate a human. In his paper, he gives two versions of the test. The first involves three people: the interrogator, a man, and a woman. The man and woman sit in a separate room from the interrogator and, in Turing’s day, communication was ideally via teleprinter. The goal is for the interrogator to guess who is male and who is female. The man’s goal is to fool the interrogator into making the wrong decision and the woman’s is to help him make the right one.

The second test in Turing’s paper replaces the woman with a machine but the machine is now the deceiver and the man tries to help the interrogator make the right decision. The interrogator still tries to guess who is male and who is female.

But don’t let that goal fool you. The real purpose of the game was as a replacement for his question of “Can a machine think?” If a machine could play the game successfully, Turing figured, his question would have been answered. Today we’re more sophisticated about what constitutes “thinking” and “intelligence”, and we’re content with a machine displaying intelligent behavior, whether or not it’s “thinking”. To unpack all this, let’s put IBM’s recent Project Debater under the microscope.

The Great Debater

IBM’s Project Debater is an example of what we’d call a composite AI as opposed to a narrow AI. An example of narrow AI is a neural network that labels the objects in an image presented to it, a narrowly defined task. A composite AI, however, performs a more complex task requiring a number of steps, much more akin to what a human brain does.

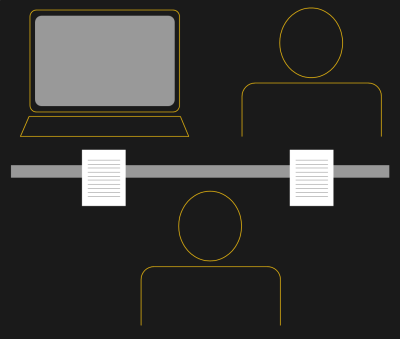

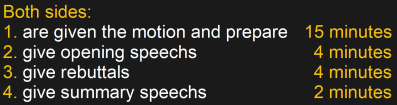

Project Debater is first given the motion to be argued. You can read the paper on IBM’s webpage for the details of what it does next, but basically it spends 15 minutes researching and formulating a 4-minute opening speech supporting one side of the motion. It also converts the speech to natural language and delivers it to an audience. During those initial 15 minutes, it also compiles leads for the opposing argument and formulates responses in preparation for its later rebuttal. It then listens to its opponent’s rebuttal, converting it to text using IBM’s own Watson speech-to-text. It analyzes the text and, in combination with the responses it had previously formulated, comes up with its own 4-minute rebuttal. It converts that to speech and ends with a 2-minute summary speech.

All of those steps, some of them considered narrow AI, add up to a composite AI. The whole is done with neural networks along with conventional data mining, processing, and analysis.
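As a rough illustration of how narrow steps chain into a composite, here is a toy sketch of the flow described above. Every function name, the canned responses, and the example motion are hypothetical stand-ins; none of this is IBM’s code or API, and the real system uses neural networks and data mining where this sketch uses string matching.

```python
from dataclasses import dataclass


@dataclass
class Speech:
    name: str
    minutes: int
    text: str


def prepare(motion):
    """Stand-in for the 15-minute research phase: draft an opening and
    stockpile responses to arguments the opponent is likely to make."""
    opening = f"We support the motion: {motion}."
    prepared_responses = {
        "cost": "The long-term benefits outweigh the cost.",
        "fairness": "The policy can be designed to be fair.",
    }
    return opening, prepared_responses


def speech_to_text(audio):
    """Stand-in for a speech-to-text service; here the 'audio' is already text."""
    return audio


def build_rebuttal(opponent_text, prepared_responses):
    """Pick the prepared responses whose topic the opponent actually raised."""
    lowered = opponent_text.lower()
    hits = [reply for topic, reply in prepared_responses.items() if topic in lowered]
    return " ".join(hits) or "Our opening argument stands."


def debate(motion, opponent_audio):
    """Chain the narrow steps into one composite task."""
    opening, prepared = prepare(motion)
    opponent_text = speech_to_text(opponent_audio)
    rebuttal = build_rebuttal(opponent_text, prepared)
    summary = f"In summary, the motion '{motion}' should carry."
    return [
        Speech("opening", 4, opening),
        Speech("rebuttal", 4, rebuttal),
        Speech("summary", 2, summary),
    ]


# Example motion and opponent statement, both made up for this sketch.
speeches = debate("We should subsidize preschool", "The cost is simply too high.")
for s in speeches:
    print(f"{s.name} ({s.minutes} min): {s.text}")
```

Each helper could be swapped for a real narrow AI without changing `debate`, which is the sense in which the whole is a composite.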

The following video is of a live debate between Project Debater and Harish Natarajan, world record holder for the number of debate competitions won. Judge for yourself how well it works.

Does Project Debater pass the Turing test? It didn’t take the formal test, but you can judge for yourself by imagining that you’re reading a transcript of what Project Debater had to say. Could you tell whether it was produced by a machine or a human? If you could mistake it for a human, then it may pass the Turing test. It also responds to the human debater’s argument, which is similar to answering questions in the Turing test.

Keep in mind though that Project Debater had 15 minutes to prepare for the opening speech and no numbers are given on how long it took to come up with the other speeches, so if time-to-answer is a factor then it may lose there. But does it matter?

Does The Turing Test Matter?

Does it matter if any of today’s AIs can pass the Turing test? That’s most often not the goal. Most AIs end up as marketed products, even the ones that don’t start out that way. After all, eventually someone has to pay for the research. As long as they do the job then it doesn’t matter.

IBM’s goal for Project Debater is to produce persuasive arguments and make well-informed decisions free of personal bias, a useful tool to sell to businesses and governments. Tesla’s goal for its AI is to drive vehicles. Chatbots abound for handling specific phone and online requests. All of them do something normally requiring the intelligence of a human, with varying degrees of success. The test that matters, then, is whether or not they do their tasks well enough for people to pay for them.

Maybe asking if a machine can think, or even if it can pass for a human, isn’t really relevant. The ways we’re using them require only that they can complete their tasks. Sometimes this can require “human-like” behavior, but most often not. If we’re not using AI to trick people anyway, is the Turing test still relevant?
