AI Upscaling and the Future of Content Delivery

The rumor mill has recently been buzzing about Nintendo’s plans to introduce a new version of their extremely popular Switch console in time for the holidays. A faster CPU, more RAM, and an improved OLED display are all pretty much a given, as you’d expect for a mid-generation refresh. Those upgraded specifications will almost certainly come with an inflated price tag as well, but given the incredible demand for the current Switch, a $50 or even $100 bump is unlikely to dissuade many prospective buyers.

But according to a report from Bloomberg, the new Switch might have a bit more going on under the hood than you’d expect from the technologically conservative Nintendo. Their sources claim the new system will utilize an NVIDIA chipset capable of Deep Learning Super Sampling (DLSS), a feature which is currently only available on high-end GeForce RTX 20 and GeForce RTX 30 series GPUs. The technology, which has already been employed by several notable PC games over the last few years, uses machine learning to upscale rendered images in real-time. So rather than tasking the GPU with producing a native 4K image, the engine can render the game at a lower resolution and have DLSS make up the difference.

The implications of this technology, especially on computationally limited devices, are immense. For the Switch, which doubles as a battery-powered handheld when removed from its dock, the use of DLSS could allow it to produce visuals similar to the far larger and more expensive Xbox and PlayStation systems it’s in competition with. If Nintendo and NVIDIA can prove DLSS to be viable on something as small as the Switch, we’ll likely see the technology come to future smartphones and tablets to make up for their relatively limited GPUs.

But why stop there? If artificial intelligence systems like DLSS can scale up a video game, it stands to reason the same techniques could be applied to other forms of content. Rather than saturating your Internet connection with a 16K video stream, will TVs of the future simply make the best of what they have using a machine learning algorithm trained on popular shows and movies?

How Low Can You Go?

Obviously, you don’t need machine learning to resize an image. You can take a standard resolution video and scale it up to high definition easily enough, and indeed, your TV or Blu-ray player is doing exactly that when you watch older content. But it doesn’t take a particularly keen eye to immediately tell the difference between a DVD that’s been blown up to fit an HD display and modern content actually produced at that resolution. Taking a 720 x 480 image and pushing it up to 1920 x 1080, or even 3840 x 2160 in the case of 4K, is going to lead to some pretty obvious image degradation.
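To make that limitation concrete, here is a minimal sketch of conventional bilinear interpolation in plain Python. It is only an illustration, not what any particular TV or Blu-ray player actually runs; the function name `upscale_bilinear` and the tiny two-pixel-wide test image are made up for the example.

```python
def upscale_bilinear(pixels, new_w, new_h):
    """Resize a 2D grid of grayscale values using bilinear interpolation."""
    old_h, old_w = len(pixels), len(pixels[0])
    out = []
    for y in range(new_h):
        # Map the output row back to a (fractional) row in the source.
        src_y = y * (old_h - 1) / (new_h - 1) if new_h > 1 else 0.0
        y0 = int(src_y)
        y1 = min(y0 + 1, old_h - 1)
        fy = src_y - y0
        row = []
        for x in range(new_w):
            # Same mapping for the column.
            src_x = x * (old_w - 1) / (new_w - 1) if new_w > 1 else 0.0
            x0 = int(src_x)
            x1 = min(x0 + 1, old_w - 1)
            fx = src_x - x0
            # Blend the four nearest source pixels by distance.
            top = pixels[y0][x0] * (1 - fx) + pixels[y0][x1] * fx
            bottom = pixels[y1][x0] * (1 - fx) + pixels[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A hard black-to-white edge, two pixels wide (hypothetical test image).
source = [[0, 255],
          [0, 255]]
scaled = upscale_bilinear(source, 4, 2)
print([round(v) for v in scaled[0]])
```

Doubling the width turns the hard edge into a ramp of roughly 0, 85, 170, 255. Every new pixel is just a weighted average of its neighbors, so the image gets bigger and softer, but no detail appears that wasn't already in the source.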

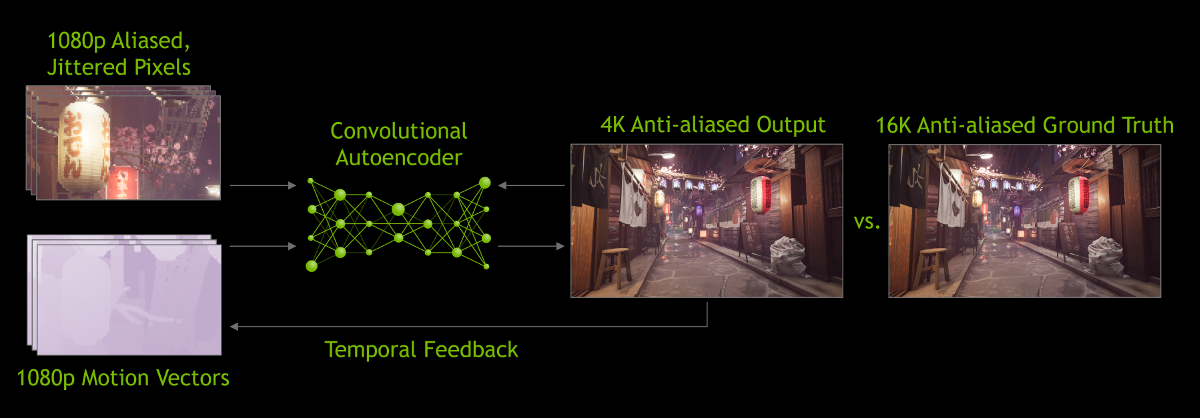

To address this fundamental issue, AI-enhanced scaling actually creates new visual data to fill in the gaps between the source and target resolutions. In the case of DLSS, NVIDIA trained their neural network by taking low and high resolution images of the same game and having their in-house supercomputer analyze the differences. To maximize the results, the high resolution images were rendered at a level of detail that would be computationally impractical or even impossible to achieve in real-time. Combined with motion vector data, the neural network was tasked not only with filling in the visual information needed to make the low resolution image better approximate the idealized target, but also with predicting what the next frame of animation might look like.

While fewer than 50 PC games support the latest version of DLSS at the time of this writing, the results so far have been extremely promising. The technology will enable current computers to run newer and more complex games for longer, and for current titles, lead to substantially higher frame rates. In other words, if you have a computer powerful enough to run a game at 30 frames per second (FPS) in 1920 x 1080, the same computer could potentially reach 60 FPS if the game were rendered at 1280 x 720 and scaled up with DLSS.

There’s been plenty of opportunity to benchmark the real-world performance gains of DLSS on supported titles over the last year or two, and YouTube is filled with head-to-head comparisons that show what the technology is capable of. In a particularly extreme test, 2kliksphilip ran 2019’s Control and 2020’s Death Stranding at just 427 x 240 and used DLSS to scale it up to 1280 x 720. While the results weren’t perfect, both games ended up looking far better than they had any right to considering they were being rendered at a resolution we’d more likely associate with the Nintendo 64 than a modern gaming PC.
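To put rough numbers on the resolutions mentioned above, the short sketch below compares pixel counts for the two cases. The helper `upscale_stats` is a made-up name for this illustration. Frame rate does not scale strictly with pixel count, and the DLSS pass has a cost of its own, so treat the ratios as intuition rather than a performance prediction.

```python
def upscale_stats(src, dst):
    """Return (pixel ratio, share of output pixels with no source pixel)."""
    src_pixels = src[0] * src[1]
    dst_pixels = dst[0] * dst[1]
    ratio = dst_pixels / src_pixels
    synthesized = 1 - src_pixels / dst_pixels
    return ratio, synthesized


# Resolution pairs taken from the examples in the text.
cases = {
    "1280 x 720 -> 1920 x 1080": ((1280, 720), (1920, 1080)),
    "427 x 240 -> 1280 x 720": ((427, 240), (1280, 720)),
}

for label, (src, dst) in cases.items():
    ratio, synthesized = upscale_stats(src, dst)
    print(f"{label}: {ratio:.2f}x the pixels, {synthesized:.0%} synthesized")
```

Rendering at 1280 x 720 means the GPU shades about 2.25 times fewer pixels than at 1920 x 1080. In the 427 x 240 test, the output has roughly nine times as many pixels as the rendered frame, so close to nine tenths of what ends up on screen has to be filled in by the upscaler.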

AI Enhanced Entertainment

While these may be early days, it seems pretty clear that machine learning systems like Deep Learning Super Sampling hold a lot of promise for gaming. But the idea isn’t limited to just video games. There’s also a big push towards using similar algorithms to enhance older films and television shows for which no higher resolution version exists. Both proprietary and open source tools are now available that leverage the computational power of modern GPUs to upscale still images as well as video.

Of the open source tools in this arena, the Video2X project is well known and under active development. This Python 3 framework makes use of the waifu2x and Anime4K upscalers, which, as you might have gathered from their names, have been designed to work primarily with anime. The idea is that you could take an animated film or series that was only ever released in standard definition, and by running it through a neural network specifically trained on visually similar content, bring it up to 1080p or even 4K resolution.

While getting the software up and running can be somewhat fiddly given the different GPU acceleration frameworks available depending on your operating system and hardware platform, this is something that anyone with a relatively modern computer is capable of doing on their own. As an example, I’ve taken a 640 x 360 frame from Big Buck Bunny and scaled it up to 1920 x 1080 using default settings on the waifu2x upscaler backend in Video2X:

When compared to the native 1920 x 1080 image, we can see some subtle differences. The shading of the rabbit’s fur is not quite as nuanced, the eyes lack a certain luster, and most notably the grass has gone from individual blades to something that looks more like an oil painting. But would you have really noticed any of that if the two images weren’t side by side?

Some Assembly Required

In the previous example, AI was able to triple the resolution of an image along each axis with negligible graphical artifacts. But what’s perhaps more impressive is that the file size of the 640 x 360 frame is only a fifth that of the original 1920 x 1080 frame. Extrapolate that difference to the length of a feature film, and it’s clear how this technology could have a huge impact on the massive bandwidth and storage costs associated with streaming video.

Imagine a future where, instead of streaming an ultra-high resolution movie from the Internet, your device is instead given a video stream at 1/2 or even 1/3 of the target resolution, along with a neural network model that has been trained on that specific piece of content. Your AI-enabled player could then take this “dehydrated” video and scale it in real-time to whatever resolution was appropriate for your display. Rather than saturating your Internet connection, it would be a bit like how they delivered pizzas in Back to the Future Part II.
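As a back-of-the-envelope sketch of what that could save, the snippet below compares a full-resolution stream against a “dehydrated” stream plus a one-time model download. Only the one-fifth size ratio comes from the single-frame comparison above, and it may not carry over to real video codecs. The 8 Mbps bitrate, the 100 MB model size, and the two-hour runtime are all hypothetical placeholders.

```python
def stream_size_gb(bitrate_mbps, minutes):
    """Total data for a constant-bitrate stream, in gigabytes (1 GB = 1000 MB)."""
    megabits = bitrate_mbps * minutes * 60
    return megabits / 8 / 1000


FULL_BITRATE_MBPS = 8.0   # hypothetical bitrate of the full-resolution stream
SIZE_RATIO = 1 / 5        # from the single-frame file size comparison
MODEL_SIZE_GB = 0.1       # hypothetical size of the per-title neural network
RUNTIME_MIN = 120         # hypothetical feature-length runtime

full = stream_size_gb(FULL_BITRATE_MBPS, RUNTIME_MIN)
dehydrated = stream_size_gb(FULL_BITRATE_MBPS * SIZE_RATIO, RUNTIME_MIN) + MODEL_SIZE_GB
savings = 1 - dehydrated / full

print(f"full stream:       {full:.2f} GB")
print(f"dehydrated + model: {dehydrated:.2f} GB")
print(f"savings:            {savings:.0%}")
```

Under these assumptions the model download is small next to the video itself, so the overall savings stay close to the raw one-fifth ratio. A much larger model, or a shorter video, would eat into that quickly.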

The only technical challenge standing in the way is the time it takes to perform this sort of upscaling: when running Video2X on even fairly high-end hardware, a rendering speed of 1 or 2 FPS is considered fast. It would take a huge bump in computational power to do real-time AI video scaling, but the progress NVIDIA has made with DLSS is certainly encouraging. Of course, film buffs would argue that such a reproduction may not fit with the director’s intent, but when people are watching movies 30 minutes at a time on their phones while commuting to work, it’s safe to say that ship has already sailed.
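For a sense of how large that gap is, here is a quick calculation based on the 1 to 2 FPS figure above. The 24 FPS playback rate and the two-hour runtime are assumptions chosen for illustration, and both function names are made up.

```python
def required_speedup(playback_fps, upscale_fps):
    """How many times faster upscaling must get to keep pace with playback."""
    return playback_fps / upscale_fps


def offline_hours(runtime_minutes, playback_fps, upscale_fps):
    """Hours needed to upscale an entire video ahead of time."""
    frames = runtime_minutes * 60 * playback_fps
    return frames / upscale_fps / 3600


PLAYBACK_FPS = 24    # assumed film frame rate
RUNTIME_MIN = 120    # assumed feature-length runtime

for upscale_fps in (1, 2):
    speedup = required_speedup(PLAYBACK_FPS, upscale_fps)
    hours = offline_hours(RUNTIME_MIN, PLAYBACK_FPS, upscale_fps)
    print(f"{upscale_fps} FPS: needs {speedup:.0f}x speedup, "
          f"or {hours:.0f} hours to process offline")
```

With those numbers, real-time playback would need upscaling to run 12 to 24 times faster, and processing a whole film ahead of time would take one to two days.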
