Leaked Internal Google Document Claims Open Source AI Will Outcompete Google and OpenAI

In the world of large language models (LLM), the focus has for the longest time been on proprietary technologies from companies such as OpenAI (GPT-3 & 4, ChatGPT, etc.) as well as increasingly everyone from Google to Meta and Microsoft. What’s remained underexposed in this whole discussion about which LLM will do more things better are the efforts by hobbyists, unaffiliated researchers and everyone else you may find in Open Source LLM projects. According to a leaked document from a researcher at Google (anonymous, but apparently verified), Google is very worried that Open Source LLMs will wipe the floor with both Google’s and OpenAI’s efforts.

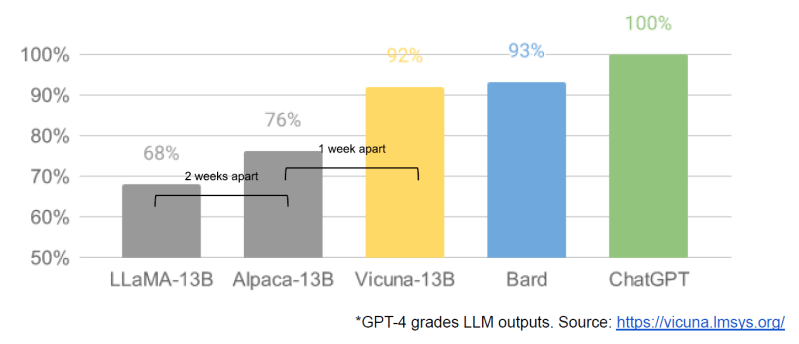

According to the document, after the open source community got their hands on the leaked LLaMA foundation model, motivated and highly knowledgeable individuals set to work to take a fairly basic model to new levels where it could begin to compete with the offerings by OpenAI and Google. Major innovations are the scaling issues, allowing these LLMs to work on far less powerful systems (like a laptop or even smartphone).

An important factor here is Low-Rank adaptation (LoRa), which massively cuts down the effort and resources required to train a model. Ultimately, as this document phrases it, Google and in extension OpenAI do not have a ‘secret sauce’ that makes their approaches better than anything the wider community can come up with. Noted is also that essentially Meta has won out here by having their LLM leak, as it has meant that the OSS community has been improving on the Meta foundations, allowing Meta to benefit from those improvements in their products.

The dire prediction is thus that in the end the proprietary LLMs by Google, OpenAI and others will cease to be relevant, as the open source community will have steamrolled them into fine, digital dust. Whether this will indeed work out this way remains to be seen, but things are not looking up for proprietary LLMs.

(Thanks to [Mike Szczys] for the tip)

Post a Comment