PinePhone Malware Surprises Users, Raises Questions

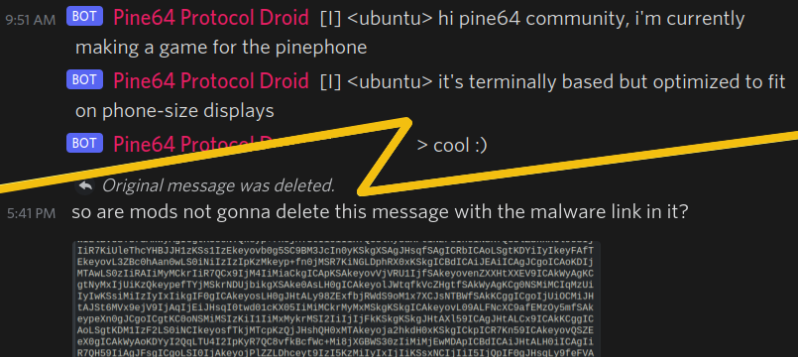

On December 5th, someone by the IRC nickname of [ubuntu] joined the Pine64 Discord’s #pinephone channel through an IRC bridge. In the spirit of December gift-giving traditions, they presented their fellow PinePhone users with an offering – a “Snake” game. What [ubuntu] supposedly designed had the potential to become a stock, out-of-the-box-installed application with a small but dedicated community of fans, modders and speedrunners.

Unfortunately, that would not be the alternate universe we live in, and all was not well with the package being shared along with a cheerful “hei gaiz I make snake gaem here is link www2-pinephnoe-games-com-tz replace dash with dot kthxbai” announcement. Shockingly, it was a trojan! Beneath layers of Base64 and Bashfuscator we’d encounter shell code that could be in the “example usage” section of a modern-day thesaurus entry for the word “yeet”.

The malicious part of the code is not sophisticated – apart from obfuscation, the most complex thing about it is that it’s Bash, a language with unreadability baked in. Due to the root privileges given when installing the package, the find-based modern-day equivalent of rm -rf /* has no trouble doing its dirty work of wiping the filesystem clean, running shred on every file beforehand, if available, to thwart data recovery. As for the “wipe the cellular modem’s firmware” bonus part, it exploits CVE-2021-31698. All of that would happen the following Wednesday at 20:00, with scheduling done by a systemd-backed cronjob.

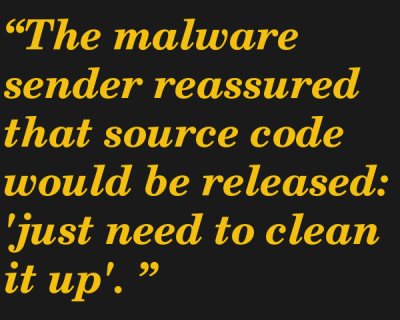

[ubuntu] didn’t share sources, just the binaries, packaged for easy installation on Arch Linux. One of the prominent PinePhone community members installed that binary and enjoyed the “game” part of it, asking about plans to make it open-source – receiving reassurance from [ubuntu] that the sources would be released eventually, “just need to clean it up”. Some weren’t so sure, arguing that people shouldn’t sudo install-this random games without a source code repo link. Folks were on low alert, and there might’ve been up to about a dozen installs before a cautious and savvy member untarred the package and alerted people to suspicious base64 in the .INSTALL script, about half a day later.
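The kind of check that caught this package can be roughly sketched in code. The snippet below is only an illustration, not the tool the community member used: it takes the text of an install script (such as the .INSTALL file from an unpacked package) and returns a decoded preview of any long Base64-looking run. The function name `find_base64_blobs` and the 40-character threshold are assumptions made for this example.

```python
import base64
import binascii
import re

# Runs of 40+ characters from the Base64 alphabet, with optional padding.
# The threshold of 40 is an arbitrary assumption for this sketch.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{40,}={0,2}")


def find_base64_blobs(script_text):
    """Return decoded previews of long Base64-looking runs in a script."""
    findings = []
    for match in BASE64_RUN.finditer(script_text):
        blob = match.group(0)
        try:
            decoded = base64.b64decode(blob, validate=True)
        except (binascii.Error, ValueError):
            continue  # looked like Base64 but did not decode cleanly
        # Keep only a short preview; undecodable bytes become U+FFFD.
        findings.append(decoded[:60].decode("utf-8", errors="replace"))
    return findings
```

A heuristic like this only peels off one layer. Nested encoding or a tool like Bashfuscator would still need a human to look at what comes out, and long hashes can trigger false positives.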

How Do We Interpret This?

This was a small-scale yet high-effort destructive attack on PinePhone users, targeting the ones using Arch specifically, by the way. The malware sender announced their “game development efforts” before publishing, stayed in the channel doing a bit of small talk and Q&A, and otherwise was not quickly distinguishable from an average developer coming to bless a prospective platform with their first app. Most of all, the Snake game was very much real – it’s not clear whether the code might’ve been stolen from some open-source project, but you wouldn’t distinguish it from a non-malicious Snake game. It’s curious that the package doesn’t seem to be sending private data to any servers (or encrypting files, or forcing you to watch ads akin to modern mobile games) – it easily could, but it doesn’t.

With the amount of work being done on the PinePhone cellular modem reverse-engineering, it’s peculiar that the malware takes advantage of the CVEs discovered alongside that effort. You wouldn’t expect a typical phone virus to pull off a cellular modem brick trick, given the fragmentation of the Android world and the obfuscation of the Apple world. Funnily enough, the community-developed open-source firmware for the Quectel cellular modem is immune to the bug being exploited and is overall more fully featured, but Pine64 is required to ship the exploitable proprietary firmware by default for regulatory compliance reasons – the consequences for stepping out of line on that are drastic enough, according to a Pine64 source.

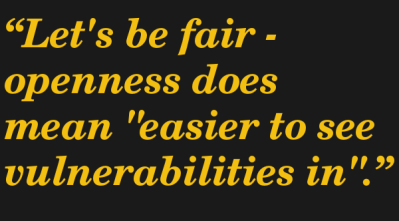

Questions spring to mind. Is PinePhone a safe platform? My take is – “yes” when compared to everything else, “no” if you expect to be unconditionally safe when using it. As it stands, it’s a platform that explicitly requires your understanding of what you’re directing it to do.

With more OS distributions available than any other modern phone could boast about being able to support, you can use something like Ubuntu Touch for a smooth experience. You are given overall more power to keep yourself safe when using a PinePhone. People who understand the potential of this power are the kind of people who contribute to the PinePhone project, which is why it’s sad that they specifically were targeted in this event.

Other platforms solve such problems in different ways, where only part of the solution is actual software and architectural work done by the platform, and another part is training the users. For instance, you’re not expected to use a third-party appstore (or firmware, or charger, or grip method) on your iPhone, and Android has developer mode checkboxes you can reach if you recreate the third movement of “Flight of the Bumblebee” with your finger in the settings screen. The Linux ecosystem way is to rely on the kernel to provide reliable low-level security primitives, but the responsibility is on the distributions to incorporate software and configurations that make use of these primitives.

I’d argue that mobile Linux distributions ought to define and maintain their position on the “security” scale, too, elaborating on the measures they take when it comes to third-party apps. Half a year ago, when I was preparing a summary on different OSes available for PinePhone and their stances on app security, it took me way more time than I’d feel comfortable having someone spend on a task of such significance.

What Are Our Options?

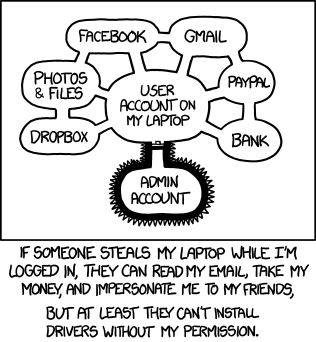

The gist of the advice given out to newcomers is “don’t install random software you can’t trust”. While this is good advice on its own, you’d be right to point out – a game shouldn’t be able to wipe your system, and “get better users” generally isn’t a viable strategy. Any security strategist in denial about inherent human fallibility is not going to make it in the modern world, so let’s see what we can do besides the usual “educate users” part. As usual, there’s an XKCD to start off with.

Even being able to write to an arbitrary user-owned file on a Linux system is “game over”. Say, in $HOME/.bashrc, you can alias sudo to stdin-recording-app sudo and grab the user’s password next time they run sudo in the terminal. .bashrc isn’t the only user-writable file getting executed regularly, either. While sandboxing solutions are being developed to solve these kinds of problems, the work is slow and many aspects of it are non-trivial, generally best described as “dynamic and complex whitelisting”.
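To make the .bashrc trick concrete, here is a minimal sketch of a check for its most naive form: it scans the text of a shell startup file for lines that redefine `sudo` as an alias or a shell function. The function name `find_sudo_overrides` and the patterns it looks for are assumptions for this example, and it would not catch a prepended PATH entry, a sourced file, or any of the other regularly executed user-writable files.

```python
import re

# Matches `alias sudo=...` and shell function definitions named sudo.
SUDO_OVERRIDE = re.compile(
    r"^\s*(alias\s+sudo=|(function\s+)?sudo\s*\(\s*\)|function\s+sudo\b)"
)


def find_sudo_overrides(rc_text):
    """Return (line_number, line) pairs that redefine `sudo` in a shell rc file."""
    hits = []
    for number, line in enumerate(rc_text.splitlines(), start=1):
        if SUDO_OVERRIDE.search(line):
            hits.append((number, line.strip()))
    return hits
```

The gap between how easy the attack is and how partial this check remains is rather the point: detection after the fact does not replace keeping untrusted code away from those files in the first place.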

A piece of commonly handed out advice is “if you can’t read the code and understand what it does, don’t run it”, presumably meant to apply even to packages and codebases longer than a weekend project. Ironically, this puts Linux at an unwarranted disadvantage to closed-source systems. The “share an .exe” way of distributing applications is older than I am personally, and it still is an accepted method of sharing software that someone wrote for Windows, with UAC having become yet another reflexive clickthrough box. Again, putting more of a security burden on Linux users’ shoulders is easy but foolish.

Would sharing the source code even help in the malware situation? No! In fact, attaching a link to a source code repo would help [ubuntu] make the malware distribution more plausible. When you publish a package, even on supposedly reputable platforms, there are rarely any checks on whether the code inside the package you upload matches the code in your repo.

That’s true for a lot of places – GitHub and GitLab releases, Docker Hub, npm, RubyGems, browser extension stores, PyPI, and even some supposedly safe repositories, like F-Droid, are vulnerable. Providing source code alongside a malicious package adds legitimacy, and takes away incentives for skilled people to check the binary in the first place – hey, the code’s there to see already! If [ubuntu] did just that, perhaps we’d be talking about this incident a few days later and in a more somber tone. Supply-chain attacks are the new hotness in 2020 and 2021.
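For packages that ship scripts or other files verbatim, the missing check is not hard to imagine. The sketch below, under the assumption that you have both an unpacked package and a checkout of the claimed source on disk, hashes every file in the two directory trees and reports files that exist only in the package or whose contents differ. The names `tree_digests` and `compare_trees` are made up for this example. Compiled binaries would need a reproducible build before any comparison like this makes sense.

```python
import hashlib
from pathlib import Path


def tree_digests(root):
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_trees(package_dir, source_dir):
    """Return (files only in the package, files whose contents differ)."""
    packaged = tree_digests(package_dir)
    source = tree_digests(source_dir)
    extra = sorted(set(packaged) - set(source))
    changed = sorted(
        name for name in set(packaged) & set(source) if packaged[name] != source[name]
    )
    return extra, changed
```

In the Snake case, an install script carrying a Base64 payload would show up in the first list, provided someone actually ran the comparison.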

Plenty of security systems we have set up are trust-based. Package signing is the most prominent one, where a cryptographic signature of a person responsible for maintaining the package is used to establish “person X vouches for this package’s harmlessness”. HTTPS is another trust-based technology we use daily, though, really, you’re trusting your browser’s or OS’s keystore maintainer way more than any particular key owner.

When enforced to the extent that it actually makes us more secure, trust-based tech puts a burden on new developers who don’t have reasonably polished social and cryptographic prowess. However, when those developers are often already met with lacking documentation, incomplete APIs and untested libraries, should we really be increasing the burden any further? Maybe that’s not so bad.

The trust-based signing tech I mention is often applied to OS images you typically download to bootstrap your PC (or phone!) with a Linux install, but it’s not yet popular on PinePhone. For instance, Arch Linux images for PinePhone don’t have such signatures. I was disappointed by this, since most major distributions for the PC provide them and I expected the Linux phone space to be no different, and not having signatures can be disastrous. Quite a few security-related features like this are there for the taking, but aren’t being used, either because they require non-trivial effort to fit into a project’s infrastructure if it was not designed with security in mind from the beginning, or because they create an additional burden on the developers.
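As a rough illustration of the user-side half of this, here is a sketch of checking a downloaded image against a published SHA-256 checksum. To be clear, this is a plain integrity check and not a cryptographic signature: it only helps if the published checksum reaches you over a channel you trust, which is the problem signatures exist to solve. The function names are assumptions for this example.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large OS images need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path, published_hex):
    """Compare the file's SHA-256 with a published hex digest."""
    expected = published_hex.strip().lower()
    return hmac.compare_digest(sha256_of_file(path), expected)
```

A real signature check would additionally verify that the checksum file itself was signed by a key you already trust, typically with a tool such as GnuPG.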

What Do We Really Need?

The PinePhone community has implemented some new rules, some venturing into “automation” territory. This will possibly make a specific kind of problem less impactful in the future – though I’d argue that institutional memory should play a larger part in this. Beware of Greeks bearing gifts… until they learn how to work around your Discord bot’s heuristics? I already have, for instance. This is a monumental topic with roots beyond the Great PinePhone Snake Malware of 2021, and this article isn’t even about that as much as it’s about helping you understand what’s up with important aspects of Linux security, or maybe even the security of all open source software.

For me, this malware strikes the notes of “inevitable” and “course adjustment” and “growing pains”. Discussions about trust and software take place in every community that gets large enough.

We need the acknowledgment that Linux malware is possible and may eventually become widespread, and a healthy discussion about how to stop it is crucial. Linux still has effectively no malware, but the day we can no longer say so is approaching.

I’m unsure of the exact course adjustment we need. Understanding the system goes a long way, but the security measures we expect can’t exclude power users and beginner developers. Technically, whether it’s containerization, sandboxing, trust-based infrastructure, or memory-safe languages, we need to know what we need before we know what to ask for.

I would like to thank [Lukasz] of the Pine64 community and [Hacker Fantastic] for help with fact-checking the PinePhone situation.
