Deep Learning Enables Intuitive Prosthetic Control

Prosthetic limbs have been slow to evolve from simple motionless replicas of human body parts to moving, active devices. A major part of this is that controlling the many joints of a prosthetic is no easy task. However, researchers have worked to simplify this task, by capturing nerve signals and allowing deep learning routines to figure the rest out.

Reported in a pre-published paper, researchers used implanted electrodes to capture signals from the median and ulnar nerves in the forearm of Shawn Findley, who had lost a hand to a machine shop accident 17 years prior. An AI decoder was then trained to decipher signals from the electrodes using an NVIDIA Titan X GPU.

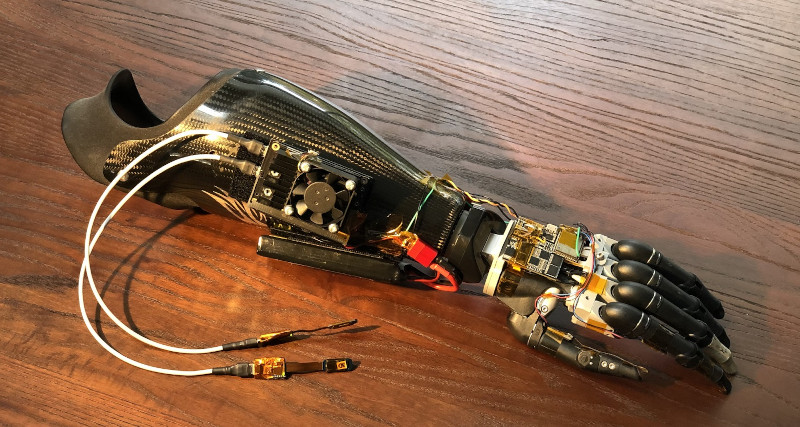

With this done, the decoder model could then be run on a significantly more lightweight system consisting of an NVIDIA Jetson Nano, which is small enough to mount on a prosthetic itself. This allowed Findley to control a prosthetic hand by thought, without needing to be attached to any external equipment. The system also allowed for intuitive control of Far Cry 5, which sounds like a fun time as well.

The research is exciting, and yet another step towards full-function prosthetics becoming a reality. The key to the technology is that models can be trained on powerful hardware, but run on much lower-end single-board computers, avoiding the need for prosthetic users to carry around bulky hardware to make the nerve interface work. If it can be combined with a non-invasive nerve interface, expect this technology to explode in use around the world.

[Thanks to Brian Caulfield for the tip!]

Post a Comment