History of Closed Captions: The Analog Era

Closed captioning on television and subtitles on DVD, Blu-ray, and streaming media are taken for granted today. But it wasn’t always so. In fact, it was quite a struggle for captioning to become commonplace. Back in the early 2000s, I unexpectedly found myself involved in a variety of closed captioning projects, both designing hardware and consulting with engineering teams at various consumer electronics manufacturers. I may have been the last engineer working with analog captioning as everyone else moved on to digital.

Before digging in, a word on terminology, since there is a lot of confusing and imprecise language floating around on this topic. Let’s establish some definitions. I often use the word captioning, which encompasses both closed captions and subtitles:

- Closed Captions: Transmitted in a non-visible manner as textual data. Usually they can be enabled or disabled by the user. In the NTSC system, closed captioning is often referred to as Line 21, since the data was transmitted on video line number 21 in the Vertical Blanking Interval (VBI).

- Subtitles: Rendered in a graphical format and overlaid onto the video / film. Usually they cannot be turned off. Also called open or hard captions.

The text contained in captions generally falls into one of three categories. Pure dialogue (nothing more) is often the style of captioning you see in subtitles on a DVD or Blu-ray. Ordinary captioning includes the dialogue, but with the addition of occasional cues for music or a non-visible event (a doorbell ringing, for example). Finally, “Subtitles for the Deaf or Hard-of-hearing” (SDH) is a more verbose style that adds even more descriptive information about the program, including the speaker’s name, off-camera events, etc.

Roughly speaking, closed captions are targeting the deaf and hard of hearing audience. Subtitles are targeting an audience who can hear the program but want to view the dialogue for some reason, like understanding a foreign movie or learning a new language.

Titles Before Talkies

Subtitles are as old as movies themselves. Since the first movies didn’t have sound, they used what are now called intertitles to convey dialogue and expository information. These were full-screens of text inserted (not overlaid) into the film at appropriate places. Some attempts were made at overlaying the subtitles which used a second projector and glass slides of text which were manually switched out by the projectionist, hopefully in synchronization with the dialogue. One forward-thinking but overlooked inventor experimented with comic book dialogue balloons which appeared next to the actor who was speaking. These techniques also made distribution of a film to other countries a relatively painless affair — only the intertitles had to be translated.

This changed with the arrival of “talkies” in the late 1920s. Now there was no need for intertitles since you could hear the dialogue. But translations for foreign audiences were still desired, and various time-consuming optical and chemical processes were used to generate the kind of subtitles we think of today. But there were no subtitles for local audiences — no doubt to the irritation of deaf and hard-of-hearing patrons who had been equally enjoying the movies alongside hearing persons for years.

Television

As television grew in popularity, there were some attempts at optical subtitles in the early years, but these were not wildly successful nor widely adopted. In the United States, there was interest brewing in closed captioning systems by the end of the 1960s. In April 1970, the FCC received a petition asking that emergency alerts be accompanied by text for deaf viewers. This request came at a perfect point in time when the technology was ready, and the various parties were interested and prepared to take on the challenge.

It was at this time that deaf bureaucrat Malcolm Norwood from the Department of Education (then HEW) entered the story. He had been working in the Captioned Films for the Deaf department since 1960. Today he is often called the Father of Closed Captioning within the community. He was the perfect leader to champion this new technology and he accepted the challenge.

The FCC agreed in principle with the issues raised, and in response issued Public Notice 70-1328 in December 1970. Malcolm and the DOE brought together a team in 1971 which included the National Bureau of Standards, the National Association of Broadcasters, ABC, and PBS. They held a conference in Nashville in December of 1971, which we can say was the birthplace of closed captioning.

It turns out that the technical implementation of broadcasting captions built on existing work. Over at the National Bureau of Standards, engineer Dave Howe had been developing a system called TvTime to distribute accurate time signals over the air. This system sent a code over a video line in the VBI, using a method which eventually morphed into the CC standard. They had been testing the system with ABC, PBS, and NBC. ABC had even begun using this system to send text messages between affiliate stations.

Another system presented at the conference by HRB-Singer altered the vertical scanning of the receiver so that additional VBI lines were visible, and transmitted the caption text digitally, but visually, in those newly-exposed lines. This caused some concern among the TV set manufacturers, and thankfully the NBS system eventually won out.

After a few promising demonstrations, in 1973 PBS station WETA in the District of Columbia was authorized to broadcast closed captioned signals in order to further develop and refine the system. These efforts were successful, and in 1976 the FCC formally reserved line 21 for closed captions.

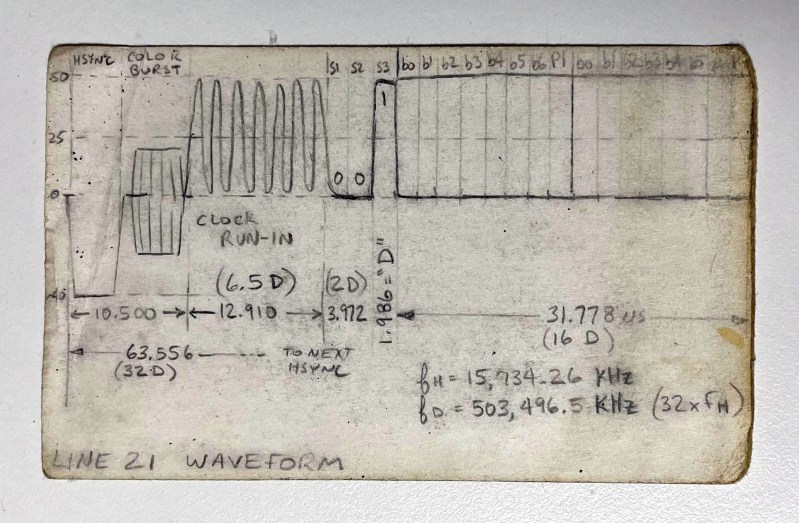

Adapting Broadcasts for Line 21

In its final form, the signal on line 21 had a few cycles of clock run-in to lock the decoder’s data recovery oscillator, followed by a 3-bit start pattern, and finally two parity-protected characters of text. The text encoding is almost always ASCII, with a few exceptions and special symbols considered necessary for the task. Text was always transmitted as a pair of characters, and has traditionally been sent in all capital letters. Control codes are also byte pairs, and they perform functions like positioning the cursor, switching captioning services, changing colors, etc. Because control codes were so crucial to the proper display of text, parity protection alone wasn’t enough: they were usually transmitted twice, with the duplicate control code pair being ignored if the first pair was error-free.
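The byte-pair handling described above can be sketched in a few lines of Python. This is a simplified illustration, not a complete decoder: it assumes odd parity on each byte and assumes a pair is a control code when its first byte (with the parity bit stripped) falls in the range 0x10 to 0x1F, as I understand EIA-608 to define it. The function names are made up for this example, and plain `chr()` stands in for the real character set, which differs from ASCII in a few places.

```python
def has_odd_parity(byte):
    """True if the 8-bit value contains an odd number of 1 bits."""
    return bin(byte & 0xFF).count("1") % 2 == 1


def add_odd_parity(char7):
    """Set bit 7 when needed so the whole byte has odd parity (encoder side)."""
    char7 &= 0x7F
    return char7 if has_odd_parity(char7) else char7 | 0x80


def decode_pairs(pairs):
    """Turn raw (byte1, byte2) pairs, one pair per field, into events.

    Returns a list of ("text", str) and ("control", (c1, c2)) tuples.
    Assumption: control pairs have a first byte in 0x10..0x1F.
    """
    events = []
    last_control = None  # most recent control pair that arrived intact
    for raw1, raw2 in pairs:
        if not (has_odd_parity(raw1) and has_odd_parity(raw2)):
            # Corrupted pair: drop it. If it was the first copy of a control
            # code, the second copy will still be accepted below.
            last_control = None
            continue
        c1, c2 = raw1 & 0x7F, raw2 & 0x7F
        if 0x10 <= c1 <= 0x1F:
            if (c1, c2) == last_control:
                last_control = None  # redundant second copy, ignore it
                continue
            events.append(("control", (c1, c2)))
            last_control = (c1, c2)
        else:
            last_control = None
            text = "".join(chr(c) for c in (c1, c2) if c != 0)
            if text:
                events.append(("text", text))
    return events
```

Feeding it the pair for "HI" followed by the same control pair twice yields one text event and one control event. If the first copy of the control pair fails its parity check, the second copy is used instead.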

| Summary of Line 21 data | |
|---|---|
| Basic Rate | 503.496 kBd (32 × horizontal frequency) |
| Grouping | Two characters per video line, each 7 bits plus a parity bit |
| Encoding | ASCII, with some modifications |
| Services | Odd fields: CC1/CC2, T1/T2; even fields: CC3/CC4, T3/T4, XDS |
| Specification | EIA-608, 47 CFR 15.119, TeleCaption II |
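As a quick sanity check on the numbers in the table, the arithmetic can be worked through as below. The NTSC color line rate of 4.5 MHz / 286 and the 262.5 lines per field are standard figures that I am bringing in here; they are not stated in the table itself. The variable names are just for illustration.

```python
# NTSC color horizontal (line) frequency: 4.5 MHz / 286, about 15.734 kHz.
LINE_RATE_HZ = 4_500_000 / 286

# The Line 21 bit clock is 32 times the horizontal frequency.
BIT_RATE_BD = 32 * LINE_RATE_HZ

# One field is 262.5 lines, giving roughly 59.94 fields per second.
FIELD_RATE_HZ = LINE_RATE_HZ / 262.5

# Each field carries line 21 once, holding two characters. Odd and even
# fields alternate, so each field type occurs at half the field rate.
CHARS_PER_LINE = 2
CHARS_PER_SEC_ONE_FIELD_TYPE = CHARS_PER_LINE * FIELD_RATE_HZ / 2

print(f"bit clock: {BIT_RATE_BD / 1000:.1f} kBd")
print(f"field rate: {FIELD_RATE_HZ:.2f} Hz")
print(f"odd-field payload: {CHARS_PER_SEC_ONE_FIELD_TYPE:.1f} characters/s")
```

The takeaway is how little bandwidth there was to work with: on the order of 60 characters per second for all the services sharing one field, control codes included.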

Today we are accustomed to near-perfect video and audio programming, thanks to digital transmissions and wired/optical networks. Adding a few extra bytes into an existing protocol packet would barely give us pause. But back then, other factors had to be considered. The resulting CC standard was fine-tuned during lengthy and laborious field tests. The captioned video signal had to be robust when transmitted over the air. Engineers had to address and solve problems like signal strength degradation in fringe reception areas and multipath in dense urban areas.

As for the captioning methods, there were a few different types available. By far the most common styles were POP-ON and ROLL-UP. In POP-ON captioning, the receiver accumulated the incoming text in a buffer until receipt of a “flip-memory” control code, whereupon the entire caption would immediately appear on-screen simultaneously with the spoken dialogue. This style was typically used with prerecorded, scripted material such as movies and dramas. On the other hand, with ROLL-UP captioning, as its name implies, the text physically rolled up from the bottom of the screen line-by-line. It was used for live broadcasts such as news programs and sporting events. The text naturally must be delayed from the audio due to the nature of the live speech transcription process.
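The difference between the two styles is easiest to see as two tiny buffer models. The sketch below is a deliberate simplification: the class and method names are invented for illustration, cursor positioning and styling are ignored, and the pop-on flip simply empties the off-screen buffer rather than modeling a real decoder's memory swap.

```python
class PopOnCaption:
    """Text accumulates off-screen and appears all at once on flip()."""

    def __init__(self):
        self.hidden = []   # off-screen buffer being filled
        self.visible = []  # what the viewer currently sees

    def receive_text(self, text):
        self.hidden.append(text)  # nothing changes on screen yet

    def flip(self):
        # The "flip-memory" control code: show the finished caption.
        self.visible, self.hidden = self.hidden, []


class RollUpCaption:
    """Text appears as it arrives; old rows scroll off the top."""

    def __init__(self, rows=3):
        self.rows = rows
        self.visible = [""]  # bottom row is the last element

    def receive_text(self, text):
        self.visible[-1] += text  # shown immediately on the bottom row

    def carriage_return(self):
        # Start a new bottom row and keep only the newest `rows` rows.
        self.visible.append("")
        self.visible = self.visible[-self.rows:]
```

With `PopOnCaption`, nothing is visible until `flip()` is called, which is what lets prerecorded captions be timed precisely to the dialogue. With `RollUpCaption`, each chunk of text is visible as soon as it arrives, which suits live transcription.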

The Brits Did it Differently, and Implemented Teletext in the Process

Across the pond, broadcast engineers at the BBC approached the issue from a different angle. Their managers asked if there was any way to use the transmitters to send data, since they were otherwise idle for one quarter of each day. Therefore they worked on maximizing the amount of data which could be transmitted.

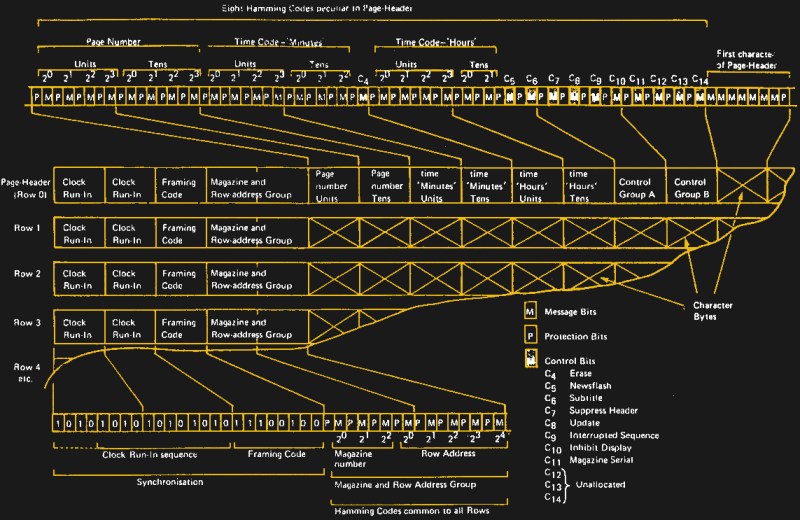

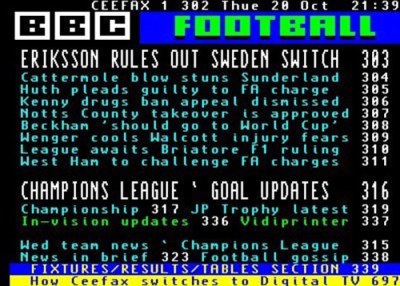

The initial service worked like a FAX machine by scanning, transmitting, and printing a newspaper page. Eventually, the BBC adopted an all-digital approach called CEEFAX developed by engineer John Adams of Philips. Simultaneously, a competing and incompatible service called ORACLE was begun by other broadcasters. In 1974, everyone finally settled on a merged standard called World System Teletext (WST) adopted as CCIR 653. Broadcasters in North America adopted a slight variant of WST called the North American Broadcast Teletext Specification (NABTS). Because it runs at a much higher data rate than CC, teletext is less forgiving of transmission errors. It employs a couple of different Hamming codes to protect and optionally recover from errors in key data fields. It is quite a complex format to decode compared to line 21.
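To give a feel for what a Hamming code buys over simple parity, here is a sketch of a textbook extended Hamming(8,4) code, which protects 4 data bits with 4 check bits, corrects any single-bit error, and detects double-bit errors. The bit layout used here is the generic textbook one and is an assumption for illustration only; the actual bit ordering used by teletext is defined in its specification and is not reproduced here.

```python
def hamming84_encode(nibble):
    """Encode 4 data bits as 8 bits: positions 1..7 plus overall parity."""
    d1, d2, d3, d4 = ((nibble >> i) & 1 for i in range(4))
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    code = [p1, p2, d1, p3, d2, d3, d4]  # list index 0 is position 1
    overall = 0
    for bit in code:
        overall ^= bit
    return code + [overall]


def hamming84_decode(bits):
    """Return the 4-bit value, or None if a double error is detected."""
    code = list(bits[:7])
    # XOR together the positions of all set bits. A valid codeword gives 0;
    # a single flipped bit gives the position of that bit.
    syndrome = 0
    for position, bit in enumerate(code, start=1):
        if bit:
            syndrome ^= position
    overall = 0
    for bit in bits:
        overall ^= bit
    if syndrome and not overall:
        return None  # two bits flipped: detectable but not correctable
    if syndrome and overall:
        code[syndrome - 1] ^= 1  # repair the single flipped bit
    # syndrome == 0 with overall == 1 means only the overall parity bit
    # was hit, so the data bits are already correct.
    d1, d2, d3, d4 = code[2], code[4], code[5], code[6]
    return d1 | d2 << 1 | d3 << 2 | d4 << 3
```

The cost is obvious: half of every protected byte is check bits. That is why this kind of protection is reserved for key fields such as addresses, where a single wrong bit would send an entire row of text to the wrong place.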

As for the format, teletext services broadcast three-digit pages of text and block graphical data — conceptually an electronic magazine. Categories of content were grouped by pages:

- 100s – News

- 200s – Business News

- 300s – Sport

- 400s – Weather and Travel

- 500s – Entertainment

- 600s – TV and Radio Listings

The text in these magazine pages is an integral part of the packet structure. For example, the text of line 4 in page 203 belongs in a specific packet for that page/line. Since the broadcaster is continuously transmitting all magazines and their pages, it may take a few seconds for the page you request to appear on-screen. NABTS takes a more free-form approach. The data can almost be considered a serial stream of text, like a connection of a terminal to a computer. If you need a new line of text, you send a CR/LF pair.
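The contrast between the two approaches can be sketched as two small data models. Both are loose illustrations with invented names: real teletext packets carry a magazine and row address, with the page number announced in a header packet, whereas here the page number is simply passed in with each row. The 40-column by 24-row page size comes from the teletext summary table.

```python
COLUMNS, ROWS = 40, 24


class PageStore:
    """Addressed model: every row of text knows its page and row number."""

    def __init__(self):
        self.pages = {}  # page number -> {row number -> 40-char text}

    def receive_row(self, page, row, text):
        # A packet for (page, row) overwrites exactly that row, so rows can
        # arrive in any order and be refreshed on each broadcast cycle.
        self.pages.setdefault(page, {})[row] = text[:COLUMNS].ljust(COLUMNS)

    def render(self, page):
        """Return 24 rows of text, or None if the page has not arrived yet."""
        rows = self.pages.get(page)
        if rows is None:
            return None  # the viewer waits for the next broadcast cycle
        return [rows.get(r, " " * COLUMNS) for r in range(ROWS)]


def render_stream(stream):
    """Stream model: layout is implied only by CR/LF pairs in the data."""
    return stream.split("\r\n")
```

In the addressed model a receiver that joins mid-broadcast can still place every row correctly. In the stream model, position depends on everything received so far, much like text arriving on a serial terminal.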

| Summary of Teletext Data | |
|---|---|
| Basic Rate | 6.938 MBd |
| Grouping | 360 bits/line, 40 available text characters |
| Encoding | Similar to Extended ASCII, with code pages |
| Services | Multiple page magazines, 40×24 chars each page |
| Specifications | Europe: WST ITU-R BT.653 (formerly CCIR 653); North America: NABTS EIA-516 |

The Hacks That Made It All Work

Most of my designs were for use in North America, but I needed to learn about European teletext for a few candidate projects. In Europe, page 888 of the teletext system was designated to carry closed captioning text. This page has a transparent background and the receiver overlays it onto the video. The visual result was practically the same as in North America. But it posed some problems regarding media like VHS tapes.

The teletext signal couldn’t be recorded or played back on your typical home VHS recorder. To solve this, many tapes were made using an adaptation of the North American line 21 system, but applied to the PAL video format. This method was variously called line 22 or line 25 (the confusion being that PAL line #1 is in a different place than NTSC line #1), but was basically the same. A manufacturer who has a CC decoder in their NTSC product can easily adapt it to work in PAL countries.

How did I get PAL VHS tapes? I asked an engineer colleague at Philips Southampton if he could send me some sample tapes for testing. His wife bought some used from a local rental store and sent them to me. This was before the days of PayPal, so I sent her an international money order for $60. This covered the price of the tapes and shipping, plus a few extra dollars “tip” for her trouble. Some weeks later, I got an email from him saying that “you Americans sure give generous tips”. His wife had received my money order for $600, not $60! It took many months, but eventually the post office caught their mistake and she returned the overage.

In South Korea, a colleague was involved in the captioning industry back in the late 1990s. He was asked to participate on a government panel considering the nationwide adoption of closed captioning. The final result was comical: instead of CC, the committee decided to provide extremely loud external TV speakers free of charge to people with hearing difficulties. Fortunately, the conventional form of closed captioning has since been adopted with the advent of digital television broadcasting.

Designing on the Trailing Edge

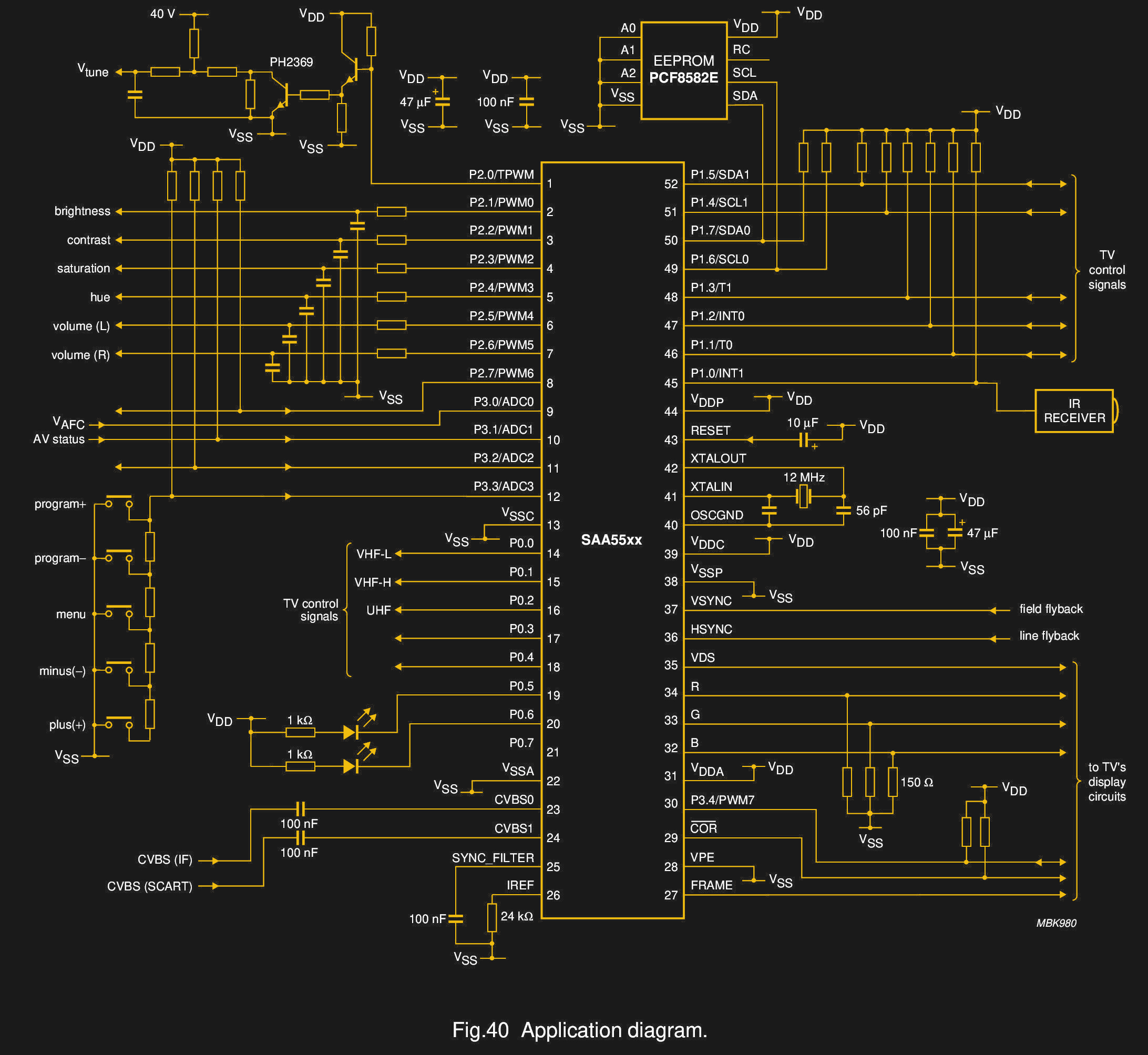

By the year 2000, almost all televisions had CC decoders built-in. As a result, there were a variety of ICs available to extract and process the line 21 signal. One example was from Philips Semiconductor (which became NXP, and later absorbed Freescale). As a key developer of teletext technology and a major chip supplier to the television industry, they offered a wide variety of CC and teletext processors. I developed several designs based on a chip from their Painter family of TV controllers. These were 8051-based microcontrollers with all the extras needed for teletext, closed captions, and user menus. They had VBI data slicers, character generators and ROM fonts, all integrated onto one die.

I still remember discovering the Painter chip buried pages deep in an internet search one day. When I couldn’t find any detailed information, I called the local rep and was told, “You aren’t supposed to even know about this part number — it’s a secret!”. Eventually the business logistics were resolved and I was allowed to use the chip. That was the only masked-ROM chip I ever made. I can still feel the rumbling in my stomach on the day I delivered the hex file to the local Philips office. The rep and I were hunched over the computer as we double- and triple-checked each entry on their internal ordering system. Once we pressed SEND, the bits were irrevocably transmitted to the factory and permanently burned into many thousands of chips. Even though we had thoroughly tested and proven the firmware in the lab, it was nevertheless a stressful day.

As I developed several other designs, it became clear that these special purpose chips should be avoided if any reasonable longevity was needed. The Painter chips were being phased out, and several other options were disappearing as well. The writing was on the wall: digital broadcasting was here to stay, and the chip manufacturers were no longer making or supporting analog CC chips. I decided that future CC projects had to be done using general purpose ICs. I plan to delve into that in a future article along with unexpected applications of CC technology, the process of making captions, and how captioning made (or didn’t make) the transition to digital broadcasting and media.
