Science Officer…Scan for Elephants!

If you watch many espionage or terrorism movies set in the present day, there’s usually a scene where some government employee enhances a satellite image to show a clear picture of the main villain’s face. Do modern spy satellites have that kind of resolution? We don’t know, and if we did we couldn’t tell you anyway. But we do know that even with unclassified resolution, scientists are using satellite imagery and machine learning to count things like elephant populations.

When you think about it, counting wildlife in its natural habitat is a hard problem. First, if you go in person, you disturb the animals you're trying to count. Even a drone is probably going to upset timid wildlife. Then there's the problem of covering a large area and figuring out whether the elephant you see today is the same one you saw yesterday. Guess wrong and you'll either undercount or overcount.

The Oxford scientists counting elephants used the WorldView-3 satellite, which can collect imagery of up to 680,000 square kilometers every day. You aren't disturbing any of the observed creatures, and since each shot covers a huge swath of territory, the double-counting problem all but vanishes.

Not Unique

Apparently, counting animals from space is nothing new. The brute-force approach is to have a grad student count them by hand from a picture, but automated methods work in certain circumstances. Everything from whales to penguins has been counted from orbit, but typically against a background of water or ice.

There have even been efforts to deduce animal populations from secondary data. For example, penguin counts can be estimated by the stains they leave on the ice. Yeah, those stains.

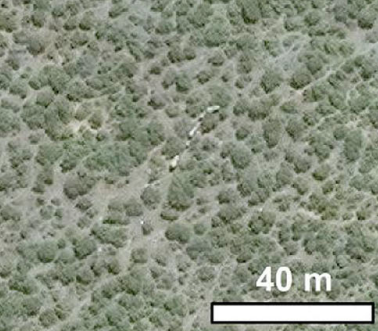

However, when counting elephants at Addo Elephant National Park in South Africa, there was no clear background. The terrain is forested and it frequently rains. The other challenge is that the elephants don't always look the same; for example, they cover themselves in mud to cool down. Can a machine learn to pick out individual elephants in high-resolution space photos?

How High?

The WorldView satellites have the highest resolution currently available to commercial users: down to 31 cm. For Americans, that's enough to pick out something about 1 foot long. That may not sound too impressive until you realize the satellite is about 383 miles above the Earth's surface. That's roughly like taking a picture from New York City and seeing things in Newport News, Virginia.
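
As a rough sanity check on those numbers, here's a short Python sketch that converts the 31 cm figure to feet and works out the angle one ground pixel subtends from 383 miles up, using the small-angle approximation (angle is roughly size divided by distance). The constants come from the paragraph above; the helper names are just for illustration.

```python
# Figures quoted in the article: 31 cm ground resolution, ~383 miles altitude.
GSD_M = 0.31            # ground sample distance in meters
ALTITUDE_MILES = 383    # approximate orbital altitude

M_PER_MILE = 1609.344
M_PER_FOOT = 0.3048


def gsd_in_feet(gsd_m: float) -> float:
    """Convert a ground sample distance from meters to feet."""
    return gsd_m / M_PER_FOOT


def angular_resolution_urad(gsd_m: float, altitude_miles: float) -> float:
    """Angle, in microradians, subtended by one ground pixel seen from orbit.

    Small-angle approximation: angle ~= size / distance.
    """
    altitude_m = altitude_miles * M_PER_MILE
    return gsd_m / altitude_m * 1e6


if __name__ == "__main__":
    print(f"31 cm is about {gsd_in_feet(GSD_M):.2f} ft")
    print(f"Altitude is about {ALTITUDE_MILES * M_PER_MILE / 1000:.0f} km")
    print(f"One pixel subtends about "
          f"{angular_resolution_urad(GSD_M, ALTITUDE_MILES):.2f} microradians")
```

That works out to roughly half a microradian per pixel, which is why the New York to Virginia comparison isn't as far-fetched as it sounds.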

The researchers didn't specifically task the satellite to look at the park. Instead, they pulled historical images from earlier passes over it. You can browse what data the satellite has, although you might not get the best or most current data without a subscription. Even so, what you can get is pretty impressive.

According to the paper, the archive images they used cost $17.50 per square kilometer. Requesting fresh images pushes the price to $27.50 per square kilometer, with a minimum order of 100 square kilometers, so satellite data isn't cheap.
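
To put those prices in perspective, here's a small cost estimator using the per-square-kilometer figures above. It assumes, as a simplification, that archive imagery has no minimum order (the article only mentions a minimum for fresh images), and the 40 km² study area is purely hypothetical.

```python
# Prices quoted in the article, in US dollars per square kilometer.
ARCHIVE_PRICE_PER_KM2 = 17.50
NEW_TASKING_PRICE_PER_KM2 = 27.50
NEW_TASKING_MIN_KM2 = 100  # minimum order for fresh imagery


def imagery_cost(area_km2: float, price_per_km2: float,
                 min_order_km2: float = 0.0) -> float:
    """Estimated order cost, billing at least the minimum order area."""
    if area_km2 < 0:
        raise ValueError("area must be non-negative")
    billed_area = max(area_km2, min_order_km2)
    return billed_area * price_per_km2


if __name__ == "__main__":
    area = 40  # hypothetical study area in km^2
    archive = imagery_cost(area, ARCHIVE_PRICE_PER_KM2)
    fresh = imagery_cost(area, NEW_TASKING_PRICE_PER_KM2, NEW_TASKING_MIN_KM2)
    print(f"Archive: ${archive:,.2f}   Fresh: ${fresh:,.2f}")
```

For a small area, the minimum order dominates: you'd pay for 100 km² of fresh imagery even if you only need 40.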

Training

Of course, a necessary part of machine learning is training. Humans counted the elephants to provide the reference answers. A test dataset had 164 elephants spread over seven different satellite images. Using a scoring algorithm, humans averaged about 78% and the machine learning algorithm averaged about 75%, not much difference. Just like humans, the algorithm was better in some situations than others and could sometimes hit 80% for certain kinds of matches.
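
The article doesn't say exactly how the scores were computed. A common choice for detection tasks is an F-score that blends precision (how many detections were real elephants) with recall (how many real elephants were found). The sketch below assumes that kind of metric and is not necessarily what the researchers used; the counts in the usage note are hypothetical.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """F-beta score from detection counts.

    tp: detections that match a labeled elephant
    fp: detections with no matching label (false alarms)
    fn: labeled elephants the detector missed
    beta > 1 weights recall more heavily than precision.
    """
    if tp == 0:
        return 0.0  # no correct detections; also avoids dividing by zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


if __name__ == "__main__":
    # Hypothetical: 120 of 164 labeled elephants found, plus 20 false alarms.
    print(f"F1 = {f_beta(120, 20, 44):.3f}")
    print(f"F2 = {f_beta(120, 20, 44, beta=2.0):.3f}")
```

Choosing beta > 1 makes sense for a census, where missing an elephant is arguably worse than flagging a suspicious bush for a human to double-check.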

Free Data

Want to experiment with your own eye in the sky? Not all satellite data costs money, although the resolutions may not suit you. Obviously, Google Earth and Google Maps can show you some satellite images. USGS has about 40 years' worth of data online, and NASA and NOAA have quite a bit, too, including NASA's high-resolution Worldview. Landviewer gives you some free images, although you'll have to pay for the highest-resolution data. ESA runs Copernicus, which has several types of imagery from the Sentinel satellites, and you can also get Sentinel data from EO Browser or Sentinel Playground. If you don't mind Portuguese, the Brazilians have a nice portal for images of the Southern Hemisphere. JAXA (the Japanese analog to NASA) has its own site with 30-meter resolution data, too. Then there's one from the Indian equivalent, ISRO.

If you don't want to log in, [Vincent Sarago's] Remote Pixel site lets you access data from Landsat 8, Sentinel-2, and CBERS-4 with no registration. There are others, too: UNAVCO, UoM, Zoom, and VITO. Of course, some of these images are fairly low-resolution (as coarse as 1 km per pixel), so depending on what you want to do with the data, you may have to look to paid sources.
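
To see why resolution matters so much for a job like counting elephants, here's a quick estimate of how many pixels one animal covers at the resolutions mentioned in this article (31 cm, 30 m, and 1 km per pixel). The 6 m by 3 m overhead footprint is a rough assumption for illustration, not a measured value.

```python
# Hypothetical overhead footprint of an elephant, in meters (rough assumption).
ELEPHANT_LENGTH_M = 6.0
ELEPHANT_WIDTH_M = 3.0


def pixels_covered(length_m: float, width_m: float, gsd_m: float) -> float:
    """Approximate number of pixels an object covers at a given ground sample distance."""
    if gsd_m <= 0:
        raise ValueError("ground sample distance must be positive")
    return (length_m / gsd_m) * (width_m / gsd_m)


if __name__ == "__main__":
    # Resolutions mentioned in the article, in meters per pixel.
    for gsd in (0.31, 30.0, 1000.0):
        px = pixels_covered(ELEPHANT_LENGTH_M, ELEPHANT_WIDTH_M, gsd)
        print(f"{gsd:>7.2f} m/pixel -> {px:.4g} pixels per elephant")
```

At 31 cm an elephant spans well over a hundred pixels; at 30 m it's a small fraction of a single pixel, so the free data is better suited to habitat than to individual animals.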

There's a wide range of resolutions as well as types of data, such as visible light, IR, or radar. However, it all beats the state of the art in 1946, when a camera aboard a V-2 rocket photographed the Earth from 65 miles up. Things have come a long way.

Sky High

We imagine that these same techniques would work with airborne photography, such as you might get from a drone or even a camera on a pole. That might be more cost-effective than buying satellite images. It made us wonder what other computer vision projects have yet to burst onto the scene.

Maybe our 3D printers will one day compare their output in real time to the input model to detect printing problems. That would be the ultimate “out of filament” sensor and could also detect loss of bed adhesion and other abnormalities.
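
Here's one way such a check might score a layer, sketched under a big assumption: some hypothetical pipeline has already turned the sliced model and the camera frame into aligned binary masks of the same size (that alignment is the hard part and isn't shown). It uses plain intersection-over-union rather than a learned model, and the 0.9 threshold is an arbitrary placeholder.

```python
from typing import List

Mask = List[List[bool]]  # True where plastic is (or should be) present


def iou(expected: Mask, observed: Mask) -> float:
    """Intersection-over-union of two same-sized binary masks."""
    if len(expected) != len(observed) or any(
        len(a) != len(b) for a, b in zip(expected, observed)
    ):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for row_e, row_o in zip(expected, observed):
        for e, o in zip(row_e, row_o):
            inter += int(e and o)
            union += int(e or o)
    return 1.0 if union == 0 else inter / union


def layer_looks_ok(expected: Mask, observed: Mask, threshold: float = 0.9) -> bool:
    """Flag a layer as suspect when the camera view drifts too far from the model."""
    return iou(expected, observed) >= threshold


if __name__ == "__main__":
    model = [[True] * 3 for _ in range(3)]
    nothing = [[False] * 3 for _ in range(3)]  # e.g., ran out of filament
    print(layer_looks_ok(model, model), layer_looks_ok(model, nothing))
```

An empty layer scores zero, and a part that has shifted off the bed would overlap the expected mask poorly, so a single number can catch several failure modes.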

Electronic data from thermal imaging or electron beam stroboscopy could provide pseudo-images as input to an algorithm like this one. Imagine training a computer to recognize what a good board looks like and then having it identify bad boards.
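
A neural network isn't the only way to learn what "good" looks like. As a much simpler stand-in, here's a statistical baseline: record per-pixel mean and spread across thermal images of known-good boards, then flag pixels on a new board that stray too far. It assumes the images are already aligned and flattened into lists of temperatures; the `k` and `min_std` values are hypothetical tuning knobs.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Image = Sequence[float]  # flattened, aligned thermal image: one reading per pixel


def fit_baseline(good_boards: List[Image]) -> Tuple[List[float], List[float]]:
    """Per-pixel mean and standard deviation across known-good boards."""
    if not good_boards:
        raise ValueError("need at least one known-good board")
    n_pixels = len(good_boards[0])
    if any(len(img) != n_pixels for img in good_boards):
        raise ValueError("all images must have the same number of pixels")
    means, stds = [], []
    for i in range(n_pixels):
        values = [img[i] for img in good_boards]
        means.append(mean(values))
        stds.append(pstdev(values))
    return means, stds


def anomalous_pixels(image: Image, means: List[float], stds: List[float],
                     k: float = 3.0, min_std: float = 0.5) -> List[int]:
    """Indices of pixels more than k standard deviations from the good-board mean."""
    flagged = []
    for i, value in enumerate(image):
        spread = max(stds[i], min_std)  # floor so near-zero spread doesn't flag tiny noise
        if abs(value - means[i]) > k * spread:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    good = [[25.0, 30.0, 40.0], [25.5, 30.5, 40.5], [24.5, 29.5, 39.5]]
    means, stds = fit_baseline(good)
    print(anomalous_pixels([25.2, 30.1, 55.0], means, stds))  # hot spot on pixel 2
```

A real board would have far more pixels and would need registration between images, but the idea of learning the normal range and flagging outliers is the same.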

Of course, you can always grab your own satellite images. We’ve seen that done many times.
