Amazon Echo Gets Open Source Brain Transplant

There’s little debate that Amazon’s Alexa ecosystem makes it easy to add voice control to your smart home, but not everyone is thrilled with how it works. The fact that all of your commands are bounced off of Amazon’s servers instead of staying internal to the network is an absolute no-go for the more privacy minded among us, and honestly, it’s hard to blame them. The whole thing is pretty creepy when you think about it.

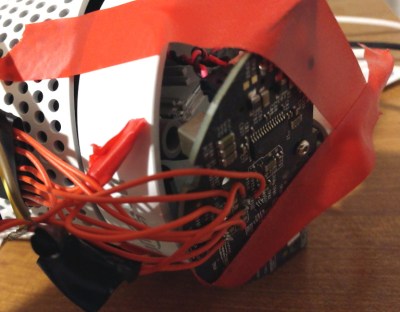

Which is precisely why [André Hentschel] decided to look into replacing the firmware on his Amazon Echo with an open source alternative. The Linux-powered first generation Echo had been rooted years before thanks to the diagnostic port on the bottom of the device, and there were even a few firmware images floating around out there that he could poke around in. In theory, all he had to do was remove anything that called back to the Amazon servers and replace the proprietary bits with comparable free software libraries and tools.

Of course, it ended up being a little trickier than that. The original Echo is running on a 2.6.x series Linux kernel, which even for a device released in 2014 is painfully outdated. With its similarly archaic version of glibc, newer Linux software would refuse to run. [André] found that building an up-to-date filesystem image for the Echo wasn’t a problem, but getting the niche device’s hardware working on a more modern kernel was another story.

He eventually got the microphone array working, but not the onboard digital signal processor (DSP). Without the DSP, the age of the Echo’s hardware really started to show, and it was clear the seven year old smart speaker would need some help to get the job done.

The solution [André] came up with is not unlike how the device worked originally: the Echo performs wake word detection locally, but then offloads the actual speech processing to a more powerful computer. Except in this case, the other computer is on the same network and not hidden away in Amazon’s cloud. The Porcupine project provides the wake word detection, speech samples are broken down into actionable intents with voice2json, and the responses are delivered by the venerable eSpeak speech synthesizer.

As you can see in the video below the overall experience is pretty similar to stock, complete with fancy LED ring action. In fact, since Porcupine allows for multiple wake words, you could even argue that the usability has been improved. While [André] says adding support for Mycroft would be a logical expansion, his immediate goal is to get everything documented and available on the project’s GitLab repository so others can start experimenting for themselves.

Post a Comment