This Week in Security: OpenWRT, Favicons, and Steganographia

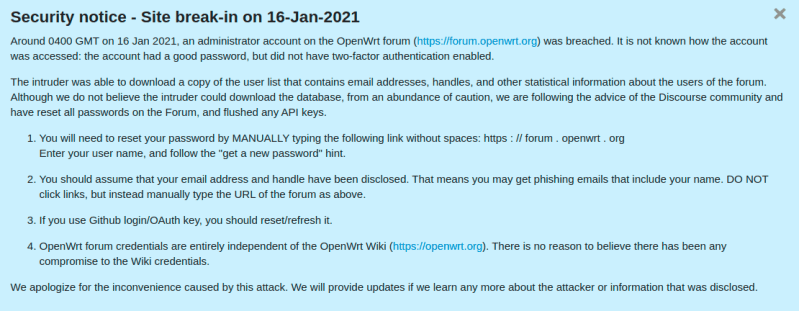

OpenWRT is one of my absolute favorite projects, but it’s had a rough week. First off, the official OpenWRT forum is carrying a notice that one of the administrator accounts was accessed, and the user list was downloaded by an unknown malicious actor. That list is known to include email addresses and usernames. It does not appear that password hashes were exposed, but just to be sure, a password expiration has been triggered for all users.

The second OpenWRT problem is a set of recently discovered vulnerabilities in Dnsmasq, a package installed by default in OpenWRT images. Of those vulnerabilities, four are buffer overflows, and three are weaknesses in how DNS responses are checked — potentially allowing cache poisoning. These seven vulnerabilities are collectively known as DNSpooq (Whitepaper PDF).

Favicon Fingerprints

One of the frustrating-yet-impressive areas of research is browser fingerprinting. You may not have an account, and may clear your cookies, but if an advertiser wants to track you badly enough, there has been a steady stream of techniques to make it possible. A new technique was added to that list: favicon caching (PDF). The big problem here is that the favicon cache is generally not sandboxed even in incognito mode (though thankfully not *saved* in incognito mode), and is not cleared with the rest of the browser data. To understand why that’s a problem, ask a simple question. How hard is it to determine if a browser has a cached copy of a favicon?

The exact scheme the authors suggest is to use multiple domains with discrete favicons, each one representing a single bit. Each user can then be assigned a different value by sending them through a redirect chain that visits only the domains whose bits should be set. As each favicon is loaded and cached, the corresponding bit is set to true. You may wonder, how does that data get read back by the server? The client is handled in a read-only mode, where the browser is redirected through every domain and each of those favicon requests returns a 404. By watching the requests come in, the server can rebuild the unique identifier based on which favicons were requested: a requested favicon was not in the cache, and a favicon that is never requested was.
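To make the write and read passes concrete, here is a minimal simulation in Python. It is a sketch of the idea as described above, not the researchers' code: the `Browser` class, the `bit{i}.tracker.example` domains, and the 8-bit width are all made up for illustration, and it assumes a cached icon stands for a 1 bit.

```python
# Sketch of the favicon ID scheme. All names and the 8-bit width are
# illustrative assumptions, not taken from the paper.
N_BITS = 8
DOMAINS = [f"bit{i}.tracker.example" for i in range(N_BITS)]  # hypothetical


class Browser:
    """Toy browser that only models a favicon cache."""

    def __init__(self):
        self.favicon_cache = set()

    def visit(self, domain, server_has_icon):
        """Visit a domain; return True if a favicon request reached the server."""
        if domain in self.favicon_cache:
            return False  # served from cache, the server sees nothing
        if server_has_icon:
            self.favicon_cache.add(domain)  # a successful response gets cached
        return True  # a 404 response leaves the cache untouched


def write_id(browser, user_id):
    """Write mode: redirect only through the domains whose bit is 1."""
    for i, domain in enumerate(DOMAINS):
        if (user_id >> i) & 1:
            browser.visit(domain, server_has_icon=True)


def read_id(browser):
    """Read mode: redirect through every domain, answering 404 each time."""
    user_id = 0
    for i, domain in enumerate(DOMAINS):
        requested = browser.visit(domain, server_has_icon=False)
        if not requested:  # no request means the icon was cached: bit is 1
            user_id |= 1 << i
    return user_id


browser = Browser()
write_id(browser, 0b10110010)
print(bin(read_id(browser)))
```

Because every read-mode request gets a 404, nothing new is cached, so the identifier can be read back on each later visit without being disturbed.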

When I first read about this technique, a potential catch-22 stood out as a problem. How would the server know whether to put a new connection into a read mode, or a write mode? It seems, at first glance, like this would defeat the whole scheme. The researchers behind favicon fingerprinting have an elegant solution for this. The favicon for a single fixed domain works as a single bit flag, indicating whether this is a new or returning user. If the browser requests that favicon, it is new, and can be funneled through the identifier writing process. If the initial favicon isn’t requested, then it should be treated as a return visit, and the ID can be read. Overall, it’s a fiendishly clever way to track users, particularly since it can even work across incognito mode. Expect browsers to address this quickly. The first step will be to sandbox cached favicons in incognito mode, but the paper calls out a few other possible solutions.
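The new-or-returning decision boils down to a single check. The sketch below models it in Python, with the browser's favicon cache reduced to a set of domain names; `FLAG_DOMAIN`, `choose_mode`, and `handle_visit` are hypothetical names chosen for this example.

```python
FLAG_DOMAIN = "flag.tracker.example"  # hypothetical fixed domain


def choose_mode(flag_favicon_requested):
    """A request for the flag icon means it was not cached: a new visitor."""
    return "write" if flag_favicon_requested else "read"


def handle_visit(favicon_cache):
    """Simulate one visit against a browser's favicon cache (a set of domains)."""
    requested = FLAG_DOMAIN not in favicon_cache
    mode = choose_mode(requested)
    if mode == "write":
        # Serve the flag icon so the browser caches it for next time.
        favicon_cache.add(FLAG_DOMAIN)
    return mode


cache = set()
print(handle_visit(cache))  # first visit
print(handle_visit(cache))  # return visit
```

The flag icon is only ever served in write mode, so a browser that has been through the writing process once will be routed to read mode from then on.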

Orbit Fox

The long-standing pattern in WordPress security is that WordPress itself is fairly bulletproof, but many of the popular plugins have serious security problems just waiting to be found. The plugin of the week this time is Orbit Fox, with over 400,000 active installs. This plugin provides multiple features, one being a user registration form. The problem is a lack of server-side validation of the form submission. On a site with such a form, all it takes is to tweak the submitted data so user_role is set to “administrator”. Thankfully, this vulnerability is only exposed when using a combination of plugins, and the problem was disclosed and patched back in December.
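The general shape of the fix for this class of bug is to take the role from server-side settings and never from the request. Here is a short Python sketch of that idea. It is not the plugin's actual patch (Orbit Fox is written in PHP), and `role_for_new_user`, `ASSIGNABLE_ROLES`, and `form_config` are hypothetical names.

```python
ASSIGNABLE_ROLES = {"subscriber", "contributor"}  # hypothetical allowlist
DEFAULT_ROLE = "subscriber"


def role_for_new_user(submitted, form_config):
    """Pick the new account's role from server-side settings only."""
    # Deliberately ignore submitted.get("user_role"): the client controls it.
    role = form_config.get("user_role", DEFAULT_ROLE)
    # Even the configured role must be on the allowlist.
    return role if role in ASSIGNABLE_ROLES else DEFAULT_ROLE


tampered = {"username": "mallory", "user_role": "administrator"}
print(role_for_new_user(tampered, {"user_role": "subscriber"}))
```

The allowlist is a second line of defense: even a misconfigured form cannot hand out a role that was never meant to be self-service.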

Patch Tuesday

Another Patch Tuesday has come and gone, and the Zero Day Initiative has us covered with an overview of what got fixed. There are two patched vulnerabilities that are noteworthy this month. The first is CVE-2021-1647, a flaw in Microsoft Defender. This one has been observed in the wild, and is an RCE exploit. If your machine is always online, you probably got this patch automatically, since Windows Defender has its own automatic update system.

The second interesting problem is CVE-2021-1648. This vulnerability is the result of a flubbed fix for an earlier problem. From Google’s Project Zero: “The only difference between CVE-2020-0986 is that for CVE-2020-0986 the attacker sent a pointer and now the attacker sends an offset.” Thankfully this is just an elevation of privilege flaw, and has now been properly fixed, as far as we can tell.

Hacking Linux, Hollywood Style

Hacking Hollywood style occasionally works. You know the movies, where an “elite hacker” frantically mashes on a keyboard while blathering nonsense about firewalls and reconfiguring the retro-encabulator. Sometimes, though, keyboard mashing actually finds security problems. In this case, a screensaver lock can be broken by typing rapidly on a physical keyboard and the virtual on-screen keyboard at the same time. The text from the bug report is golden: “A few weeks ago, my kids wanted to hack my linux desktop, so they typed and clicked everywhere, while I was standing behind them looking at them play… when the screensaver core dumped and they actually hacked their way in! wow, those little hackers…”

Zoom Unmasking

Imagine with me for a moment, that you work for Evilcorp. You’re on a Zoom call, and the evil master plan is being spelled out. You’re a decent human being, so you start a screen recorder to get a copy of the evidence. You’ve got the proof, now to send it off to the right people. But first, take a moment to think about anonymity. When your recording hits the nightly news, will Evilcorp be able to figure out that it was you that leaked the meeting? Yes, most likely.

There is a whole field of study around the embedding, detecting, and mitigating of identifying information in audio, video, and still images. This discipline is known as steganography, and fun fact, it’s named after a trio of books, “Steganographia”. With the first written in 1499, the Steganographia books appear to be mystical texts about how to communicate over long distances via supernatural spirits. In a very satisfying twist, the books are actually a guide to hiding encrypted messages in written texts, the whole thing hidden using the techniques described in the hidden text. For our purposes, we could divide the field of steganography into two broad categories: intentionally hidden data, and unintentionally included indicators.

At the top of the list of intentional data is Zoom’s watermarking feature. The call administrator can enable it, which embeds unique identifiers in the call audio, video, or both. The video watermarks are pretty easy to spot: your username overlaid onto the image. There’s no guarantee that there isn’t also sneakier watermarking being done. Next to consider is metadata. Particularly if you pulled out a cellphone to make the recording, that file almost certainly has time and location data baked into it. A GPS coordinate makes for quite the easy identifier, when it points right at your house.

Unintentional steganography could be as simple as having your self-view camera highlighted at the top of the call. There are more subtle ways to pull data out of a recording, like looking at the latency of individual call members. If speaker B is a few milliseconds ahead in the leaked version, compared to all the others, then the leaker must be physically close to that call member. It’s extremely hard to cover all your bases when it comes to anonymizing media, and we’ve just scratched the surface here.
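The latency trick can be sketched in a few lines of Python. This assumes the investigator has a reference recording of the same call and can timestamp when each speaker starts talking in both copies. The function name, the 2 ms threshold, and the timestamps are all made up for illustration.

```python
from statistics import median


def likely_neighbor(reference_ms, leaked_ms, threshold_ms=2.0):
    """Return the speaker who arrives unusually early in the leak, or None."""
    # How much later each speaker is heard in the leak than in the reference.
    shift = {s: leaked_ms[s] - reference_ms[s]
             for s in reference_ms if s in leaked_ms}
    if not shift:
        return None
    typical = median(shift.values())
    speaker = min(shift, key=shift.get)  # smallest delay in the leaked copy
    # Only flag the speaker if they beat the typical delay by a clear margin.
    return speaker if typical - shift[speaker] >= threshold_ms else None


# Made-up onset times in milliseconds, one per speaker.
reference = {"A": 1000.0, "B": 2000.0, "C": 3000.0, "D": 4000.0}
leaked = {"A": 1040.0, "B": 2035.0, "C": 3041.0, "D": 4039.0}
print(likely_neighbor(reference, leaked))
```

Comparing against the median rather than a fixed value means a constant offset between the two recordings cancels out, and only the relative difference between speakers matters.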
